Introduction to TJES

This chapter describes the characteristics and components of TJES, discusses multi-node TJES, the start-up and shut-down of TJES, and the TJES system table.

1. Overview

OpenFrame TJES (Tmax Job Entry Subsystem, hereafter TJES) is the batch management module for the OpenFrame system and is equivalent to IBM JES. TJES is implemented through Tmax (TmaxSoft’s TP monitoring solution), enabling support for multi-node configuration and automatic error correction.

OpenFrame TJES is a batch processing solution that supports MVS JCL on UNIX, much like JES on an IBM mainframe. Through multi-node clustering built on performance-proven middleware, TJES overcomes the limits of a single UNIX system and provides greater expandability. This makes OpenFrame TJES a solution that can rehost large-scale mainframe systems with greater stability.

TJES is responsible for the entire job flow in the OpenFrame environment: accepting jobs that users submit through JCL, scheduling the accepted jobs according to the available resources, executing them through the Runner, outputting or printing the results of job execution, and providing the processing details of jobs.

TJES is responsible for:

- Receiving jobs submitted through JCL
  - Supporting MVS JCL from IBM mainframes
  - Supporting third-party schedulers such as CONTROL-M and A-AUTO
  - Supporting an internal reader
  - Supporting an external writer
- Scheduling submitted jobs
  - Scheduling by job class and priority
  - Supporting multi-node scheduling
- Processing job output
  - Supporting INFOPRINT (supports IBM printing format)

This user guide covers job management/execution steps, spool management, and output processing steps. It also covers other aspects of operating TJES including logging, error troubleshooting and the configuration of UNIX environment variables.

2. Characteristics

TJES allows users to run not only mainframe batch code (Utility, COBOL, PL/I) but also code that runs natively in a Unix environment, such as Unix shell scripts or C programs. Processing batch jobs with only Unix shell scripts and cron jobs is often complex because it lacks systematic job scheduling and resource management.

TJES provides a systemized batch system with the following features.

- Scheduling by job class and Runner class
- Management of the number of concurrently run batch jobs by setting the number of available Runner slots
- Confirmation of job progress and processing status/results
- Control of job status: property-change/pause/resume/suspend
- Output management
- Guaranteed data integrity through data set locks
- Improved security using TACF
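
As a rough illustration of class-based scheduling with a limited number of Runner slots, the following Python sketch assigns waiting jobs to free slots. The `Job` and `RunnerSlot` types, the idea that a slot accepts a set of job classes, and the convention that a larger number means a higher priority are all assumptions made for this example; they do not describe the actual obmjschd implementation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Job:
    job_id: str
    job_class: str
    priority: int      # assumption: a larger value is scheduled first
    submit_order: int  # tie-breaker: earlier submission first


@dataclass
class RunnerSlot:
    name: str
    classes: str               # assumption: job classes this slot accepts, e.g. "AB"
    job: Optional[Job] = None  # None means the slot is free


def schedule(queue, slots):
    """Assign waiting jobs to free slots; return (slot name, job ID) pairs."""
    assignments = []
    # Highest priority first, then oldest submission first.
    waiting = sorted(queue, key=lambda j: (-j.priority, j.submit_order))
    for slot in slots:
        if slot.job is not None:
            continue  # a busy slot cannot take another job, which caps concurrency
        for job in waiting:
            if job.job_class in slot.classes:
                slot.job = job
                waiting.remove(job)
                queue.remove(job)
                assignments.append((slot.name, job.job_id))
                break
    return assignments


queue = [
    Job("JOB00001", "A", priority=5, submit_order=1),
    Job("JOB00002", "B", priority=9, submit_order=2),
    Job("JOB00003", "A", priority=7, submit_order=3),
]
slots = [RunnerSlot("SLOT1", "A"), RunnerSlot("SLOT2", "AB")]
result = schedule(queue, slots)
```

With two slots and three jobs, one job stays in the queue until a slot becomes free, which is how the number of Runner slots limits the number of concurrently running jobs.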

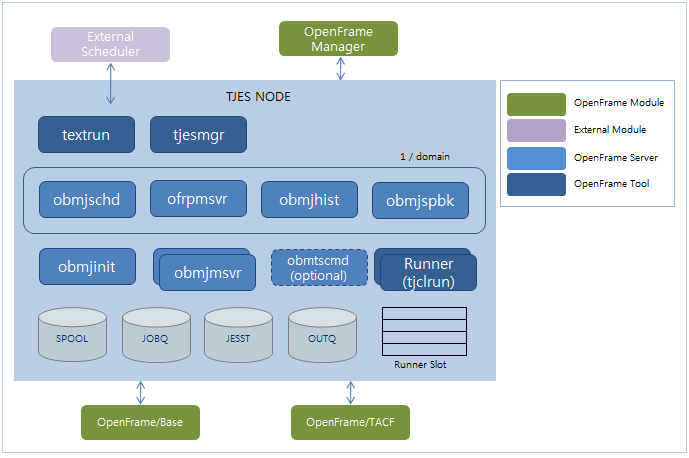

3. Architecture

The following are the TJES components.

3.1. TJES Components

- obmjschd

  Job scheduler. Only one instance of obmjschd runs for the entire domain. It schedules jobs, generates JOB IDs, and manages the boot status of each node.

- ofrpmsvr

  Print server. Only one instance runs per domain. It prints OUTPUT registered in OUTPUTQ using the currently configured printer.

- obmjhist

  Job history server. Only one instance of obmjhist runs for the entire domain. It logs all activities that alter a job’s state.

- obmjmsvr

  Job management server. It is responsible for job output management and job queries.

- obmjspbk

  SPOOL backup server. It runs on one node per domain. It backs up the spool of a job that has been terminated and removed, so that terminated jobs can still be queried. All backups can be queried in the same format.

- obmjinit

  Server for managing the Runner and Runner slots. Each node runs one instance of obmjinit. It handles the Runner and Runner slots allocated to the node on which it runs, and is also responsible for assigning jobs to that node.

  If obmjinit is restarted because of a Tmax failover, it changes jobs in the WORKING or SUSPEND status to the STOP status and forcibly ends their runner processes. If a user restarts obmjinit manually, it changes the job status but does not end the runner processes, because neither the restart time nor the runner’s PID is clear.

- obmtscmd (optional)

  Server that processes the TSO commands described in a CLIST when the CLIST is run through the ISPF service.

  This server is provided only in environments that use the ISPF service. Because it exchanges data with the ISPF service in JSON format, jansson (jansson.h, libjansson.so) must be installed in a Linux environment.

- tjclrun

  Module responsible for running JCL code. It runs JCL JOBs in the specified STEP order.
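
The obmjinit restart behavior described above can be sketched as follows. This is a minimal illustration, not TJES code: the `JobEntry` type, its `runner_pid` field, and the `kill` callback are hypothetical, and "DONE" is used only as an example of a status that is left untouched.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class JobEntry:
    job_id: str
    status: str                # e.g. "WORKING", "SUSPEND", "STOP"
    runner_pid: Optional[int]  # hypothetical field: PID of the runner process


def recover_jobs(jobs, restarted_by_failover, kill):
    """Mark interrupted jobs as STOP; end runners only after a failover restart."""
    killed = []
    for job in jobs:
        if job.status not in ("WORKING", "SUSPEND"):
            continue  # jobs in other states are not affected
        job.status = "STOP"
        # After a manual restart the runner processes are left alone.
        if restarted_by_failover and job.runner_pid is not None:
            kill(job.runner_pid)
            killed.append(job.runner_pid)
    return killed


terminated = []  # stands in for real process termination
jobs = [
    JobEntry("JOB00001", "WORKING", 101),
    JobEntry("JOB00002", "DONE", None),
    JobEntry("JOB00003", "SUSPEND", 103),
]
killed = recover_jobs(jobs, restarted_by_failover=True, kill=terminated.append)
```

Passing `restarted_by_failover=False` models the manual restart: the statuses still change to STOP, but `kill` is never called.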

3.2. TJES Component DB Session

Certain TJES servers utilize databases for managing system metadata and handling dataset I/O operations. As such, these servers maintain specific database sessions to support these functionalities.

The table below lists the database sessions used for these purposes:

| No | Session Client | Session Count | Session Type | Purpose |
|---|---|---|---|---|
| 1 | obmjinit | 1 | ODBC | OpenFrame metadata |
| 2 | obmjspbk | 1 | ODBC | OpenFrame metadata |
| 3 | obmjschd | 1 | ODBC | OpenFrame metadata |
| 4 | obmjmsvr | 1 | ODBC | OpenFrame metadata |
| 5 | ofrpmsvr | 1 | ODBC | OpenFrame metadata |
| 6 | obmtsmgr | 1 | ODBC | OpenFrame metadata |
| 7 | tjclrun (increases during job execution) | 1 | ODBC | OpenFrame metadata, VSAM (up to 2) |

NOTE: The number of active sessions may increase depending on the programs executed via STEP or the databases accessed through UTIL.
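
For capacity planning, the session counts in the table above can be turned into a rough lower bound on the number of ODBC sessions. The sketch below assumes one session per server instance and one base session per running job; the instance counts in the example are hypothetical, and the real number can be higher because tjclrun sessions may increase during job execution.

```python
# Session count per client instance, taken from the table above.
SESSIONS_PER_SERVER = {
    "obmjinit": 1,
    "obmjspbk": 1,
    "obmjschd": 1,
    "obmjmsvr": 1,
    "ofrpmsvr": 1,
    "obmtsmgr": 1,
}
# Base session per running job; more may be opened by STEP programs or UTIL access.
TJCLRUN_BASE_SESSIONS = 1


def minimum_sessions(server_instances, running_jobs):
    """Lower bound on ODBC sessions for the given instance counts and job load."""
    server_total = sum(
        SESSIONS_PER_SERVER[name] * count
        for name, count in server_instances.items()
    )
    return server_total + running_jobs * TJCLRUN_BASE_SESSIONS


# Hypothetical two-node domain: obmjinit on each node, four obmjmsvr instances.
instances = {
    "obmjinit": 2,
    "obmjspbk": 1,
    "obmjschd": 1,
    "obmjmsvr": 4,
    "ofrpmsvr": 1,
    "obmtsmgr": 1,
}
estimate = minimum_sessions(instances, running_jobs=20)
```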

3.3. Other OpenFrame Products

- OpenFrame Base

  OpenFrame Base is an essential product to start OpenFrame Batch, OSC, and OpenFrame Manager. OpenFrame Base supports data sets such as NVSM and TSAM, and manages catalogs and data set locks.

  For more information about OpenFrame Base, refer to OpenFrame Base Guide.

- OpenFrame TACF

  TACF manages OpenFrame security. OpenFrame TJES should be integrated with OpenFrame TACF for strengthened security such as user authentication and authority check over resources such as data sets and job names.

  For more information about OpenFrame TACF, refer to OpenFrame TACF Administrator’s Guide.

3.4. Resources Used by TJES

- Runner Slot

  Stores the Runner’s operating information. It is used as the data exchange window between the Runner and obmjinit. Each Runner is allocated one Runner Slot. The Runner Slot is created when obmjinit is in its normal operation state and removed when obmjinit is terminated. It is implemented as a system table, OFM_BATCH_RUNNER.

- Spool

  Reserved data set required by TJES. It logs the resources needed for running jobs and the progress and results of executed jobs. The spool creates a folder for each job, using the job’s JOBID (assigned when the job is submitted) as the folder name. Depending on the configuration, a job’s spool entry is managed either as a member of the spool directory or in a system table. The job spool is deleted by the REMOVE or CANCEL commands.

- TJES system tables

  Used by TJES for storing internal information. JOBQ, JESST, and OUTPUTQ are TJES system tables. For more information, refer to TJES System Tables.

3.5. Interface

- textrun

  Module that allows third-party schedulers to submit a job to TJES and to monitor the progress/results of the job. Third-party schedulers regard the job generation/termination as the generation/termination of a process. Therefore, the textrun module runs until the submitted job is terminated. textrun is terminated after the job has been terminated and the JOB processing results are returned.

- tjesmgr

  Command line user interface for system administration. All TJES functions, including the BOOT and SHUTDOWN commands, can be used from this command line interface.

- [Batch] menu of OpenFrame Manager

  GUI-based user interface for general users. It provides job submission and query functionality but does not provide administrative functionalities such as the booting or shutting down of TJES.

3.6. External Products

- External Scheduler

  TJES provides class and priority scheduling only and does not provide inter-job scheduling, such as running job A before job B. For inter-job scheduling, or for automated daily, weekly, or monthly job submission, an external scheduler such as CONTROL-M or A-AUTO must be used.
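
To make the division of work concrete, the following sketch shows the kind of inter-job ordering that an external scheduler has to supply, since TJES itself only schedules by class and priority. It uses Python's standard `graphlib` module; `submit_and_wait` is a hypothetical callable standing in for whatever mechanism the scheduler uses to submit one job and wait for it to terminate.

```python
from graphlib import TopologicalSorter


def run_in_dependency_order(dependencies, submit_and_wait):
    """Submit each job only after all jobs it depends on have finished.

    dependencies maps a job name to the set of jobs that must run before it.
    submit_and_wait is a hypothetical callable that submits one job, blocks
    until it terminates, and returns True on success.
    """
    completed = []
    for job in TopologicalSorter(dependencies).static_order():
        if not submit_and_wait(job):
            break  # stop the chain; downstream jobs are not submitted
        completed.append(job)
    return completed


# Job B must run after job A, and job C after job B.
deps = {"JOBB": {"JOBA"}, "JOBC": {"JOBB"}}
order = run_in_dependency_order(deps, submit_and_wait=lambda job: True)
```

A blocking call per job matches the way third-party schedulers treat a job as a process that runs until the job terminates.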

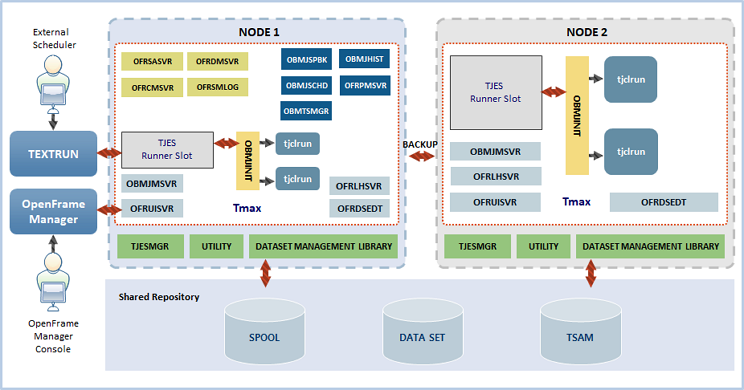

4. Multi-node TJES Architecture

TJES provides a multi-node TJES environment that enables multiple Unix machines to be used as a single machine, enhancing performance and availability. This section describes the architectural configuration and function of each component when setting up TJES for multi-node configuration.

The following is a two-node TJES configuration.

In order to set up TJES in a multi-node configuration, data storage components such as spool, data sets, and TSAM must be shared. TJES uses shared resources to access a common data set from different nodes and retrieve job results from different nodes. Effectively, such tasks are run from a single image.

The servers located only in NODE1 (ofrsasvr, ofrpmsvr, obmjschd, obmjhist, obmjspbk, and obmtsmgr) are Tmax servers of which only a single instance runs per domain. If NODE1 fails, Tmax automatically fails over by starting these servers on NODE2 to ensure uninterrupted operation.

Tmax servers located in both NODE1 and NODE2 (obmjmsvr, ofrdsedt, ofrlhsvr, ofruisvr, and obmjinit) can have several instances running on each node to provide multi-node services. The exception is obmjinit, which has one instance running per node.

tjclrun is a process that is run on demand. One tjclrun instance is needed per job, and no tjclrun instance exists while no jobs are running on the node. The TJES Runner slot, which is used as the information delivery window between obmjinit and tjclrun, is created when obmjinit is in normal operation and deleted when obmjinit is terminated.

textrun, OpenFrame Manager, and tjesmgr are external user interfaces and can connect to any node through Tmax.
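
The failover behavior of the single-instance servers can be pictured with a small placement function. This is only a sketch under simplifying assumptions: the node preference order and the set of healthy nodes are hypothetical inputs, and the real placement is decided by Tmax.

```python
# Servers that the text above lists as running one instance per domain.
SINGLE_INSTANCE_SERVERS = [
    "ofrsasvr", "ofrpmsvr", "obmjschd", "obmjhist", "obmjspbk", "obmtsmgr",
]


def place_single_instance_servers(nodes, healthy):
    """Return {server: node}, using the first healthy node in preference order.

    nodes is the preference order (for example ["NODE1", "NODE2"]) and
    healthy is the set of nodes that are currently up.
    """
    for node in nodes:
        if node in healthy:
            return {server: node for server in SINGLE_INSTANCE_SERVERS}
    raise RuntimeError("no healthy node available")


normal = place_single_instance_servers(["NODE1", "NODE2"], {"NODE1", "NODE2"})
after_failure = place_single_instance_servers(["NODE1", "NODE2"], {"NODE2"})
```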

5. TJES Boot and Down

During the boot state, TJES is able to process jobs. While in the down state TJES is running but does not process jobs. The system administrator can initiate BOOT and SHUTDOWN commands through the Runner slots from tjesmgr.

For more information about the usage, refer to BOOT and SHUTDOWN in INITIATOR Commands.

5.1. Boot

The BOOT command works only when TJES is in the down state; if the system is already in the boot state, the BOOT command is ignored. Boot brings each TJES node to a state in which its Runner slots can be used, then reports the current state of each node’s Runner slots to obmjschd so that job scheduling can proceed.

Once TJES has been successfully booted, it is recorded in the OFM_BATCH_NODEST system table that the node has been successfully booted. This record aids the TJES automatic recovery procedure by allowing the nodes that are already in a boot state to maintain their state throughout the recovery procedure in the event of a problem such as a DB connection failure.

In a multi-node TJES environment, the BOOT command is sent to all nodes within the domain when TJES needs to be booted. However, there are also commands that boot each node separately. When a node is booted, the state of its Runner slots is set to active or inactive, matching what it was before the last down state. If the node has not been booted before, the boot proceeds as defined in the INITDEF section of the tjes subject.
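
The boot rules above (BOOT is ignored in the boot state, Runner slot states are restored from before the last down state, and INITDEF supplies the defaults for a first boot) can be sketched as a small state holder. The `Node` class, its attribute names, and the state strings are assumptions for illustration only.

```python
class Node:
    """Toy model of one TJES node's boot state and Runner slot states."""

    def __init__(self, name, initdef_states):
        self.name = name
        self.booted = False
        self.initdef_states = dict(initdef_states)  # stands in for INITDEF
        self.saved_states = None  # slot states before the last down state
        self.slot_states = {}

    def boot(self):
        if self.booted:
            return False  # BOOT is ignored when already in the boot state
        if self.saved_states is not None:
            source = self.saved_states      # restore the earlier states
        else:
            source = self.initdef_states    # first boot: use the defaults
        self.slot_states = dict(source)
        self.booted = True
        return True

    def shutdown(self):
        if not self.booted:
            return False  # SHUTDOWN is ignored in the down state
        self.saved_states = dict(self.slot_states)
        self.slot_states = {slot: "down" for slot in self.slot_states}
        self.booted = False
        return True


node = Node("NODE1", {"SLOT1": "active", "SLOT2": "active"})
first_boot = node.boot()
second_boot = node.boot()
node.slot_states["SLOT2"] = "inactive"  # an operator deactivates one slot
node.shutdown()
node.boot()
```

After the final boot, SLOT2 is inactive again rather than reset to its INITDEF default.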

5.2. WarmBoot and ColdBoot

- WarmBoot

  Warm boot is a general boot that can be used after the system has already been initialized. It initializes the TJES shared memory and reports the current state of the Runner slots to the scheduler so that scheduling can proceed. Warm boot can be invoked using the BOOT command from tjesmgr.

- ColdBoot

  Cold boot is a special booting procedure that initializes OpenFrame TJES. The system tables are initialized using the tjesinit tool. A warm boot is still necessary afterward in order to process jobs.

The following cases require a cold boot.

- After installing OpenFrame TJES
- After making a major upgrade such as a change to system table data
- After making the following changes to the system within the tjes subject
  - Changing the JOBNUM range in the JOBDEF section
  - Changing the JOBCLASS section
  - Changing the OUTNUM range in the OUTDEF section

For more information about the tjesinit tool, refer to "tjesinit" in OpenFrame Batch Tool Reference Guide.
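
The configuration-related cold boot conditions listed above can be expressed as a simple check. The representation of a change as a `(section, key)` pair is an assumption made for this sketch, and the `INITDEF` entry in the example is a hypothetical setting used only to show a change that is not on the list.

```python
# Changes in the tjes subject that require a cold boot, per the list above.
COLD_BOOT_SETTINGS = {
    ("JOBDEF", "JOBNUM"),
    ("OUTDEF", "OUTNUM"),
}
COLD_BOOT_SECTIONS = {"JOBCLASS"}  # any change within this section


def needs_cold_boot(changed):
    """changed is an iterable of (section, key) pairs that were modified."""
    return any(
        section in COLD_BOOT_SECTIONS or (section, key) in COLD_BOOT_SETTINGS
        for section, key in changed
    )


jobnum_changed = needs_cold_boot([("JOBDEF", "JOBNUM")])
class_changed = needs_cold_boot([("JOBCLASS", "A")])
other_changed = needs_cold_boot([("INITDEF", "EXAMPLE_KEY")])  # hypothetical key
```

Installation and major upgrades also require a cold boot, but they are not configuration changes and are therefore outside this check.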

5.3. Shutdown

The SHUTDOWN command works only when obmjinit has been booted; if it is already in the down state, the SHUTDOWN command is ignored. Additional job scheduling can be prevented by changing the TJES node state to 'Not Booted' in the desired nodes.

The jobs that were already scheduled before entering the down state are run until they have completed under normal conditions, but if the user forces a shutdown, all jobs are terminated immediately.

To terminate all jobs and then shut down the system, use the STOP command to terminate the jobs and then the SHUTDOWN command to terminate the system process.

If TJES is successfully shut down, a log entry is made to the OFM_BATCH_NODEST system table for the corresponding node. This information is used later to keep the node in a shutdown state throughout the automatic recovery procedure that could occur in the event of a problem such as a DB connection failure.

In a multi-node TJES environment, the SHUTDOWN command is sent to all nodes inside the domain when TJES needs to be shut down. However, this command can also be passed to only specific nodes.

- Command to shut down the entire system.

      shutdown

- Command to shut down a specific node.

      shutdown node=nodename

When the SHUTDOWN command is received, the state of the Runner slot in the corresponding node is by default changed to a 'Down' state. If a job is being processed at the time the SHUTDOWN command is received, the state of the Runner slot is kept as 'Working' until the job processing is completed. Once the job has finished, the Runner slot state is changed to 'Down'.
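
The Runner slot transitions during shutdown can be sketched as two small functions. The 'Working' and 'Down' state names come from the text above; the 'Active' state name and the dictionary representation of the slots are assumptions for illustration.

```python
def on_shutdown(slot_states):
    """Apply SHUTDOWN: idle slots go Down at once, busy slots stay Working."""
    return {
        slot: state if state == "Working" else "Down"
        for slot, state in slot_states.items()
    }


def on_job_finished(slot_states, slot, shutting_down):
    """When a job ends during shutdown, its slot moves from Working to Down."""
    updated = dict(slot_states)
    # "Active" is an assumed name for a free slot outside of shutdown.
    updated[slot] = "Down" if shutting_down else "Active"
    return updated


states = {"SLOT1": "Active", "SLOT2": "Working"}
after_shutdown = on_shutdown(states)
after_job = on_job_finished(after_shutdown, "SLOT2", shutting_down=True)
```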

6. TJES System Tables

TJES system settings and resource information are stored in system tables that all nodes can access. These reserved system tables are described below.

6.1. JESST

The JESST system tables store the TJES system-level information described below.

| System-level Information | Description |
|---|---|
| Node Information | Information about all nodes in the system and their current boot state. This information is stored in the OFM_BATCH_NODEST system table. |
| JOBQ Information | General information about JOBQ, such as the range of JOBIDs. This information is stored in the OFM_BATCH_JOBQ system table. |
| OUTPUTQ Information | General information about OUTPUTQ, such as the size of OUTPUTQ. This information is stored in the OFM_BATCH_OUTPUTQ system table. |
| JOB CLASS Information | Default property information for each job class. This information is stored in the OFM_BATCH_JCLSST system table. |

6.2. JOBQ

JOBQ is a system table that stores information TJES needs for managing jobs. JOBID, JOBNAME, JOBCLASS, JOBPRTY, JOBSTATUS, JCLPATH, and USER ACCOUNT are all stored in the JOBQ data set. A default index for JOBID is available.

The actual table name for JOBQ is OFM_BATCH_JOBQ. This table must be installed when installing OpenFrame.

6.3. OUTPUTQ

The OUTPUTQ system table stores information required for managing outputs, which are the results of jobs executed by TJES.

The system tables are created during OpenFrame installation, and must be initialized prior to tmboot by using tjesinit, which is a Unix tool for coldboot provided by OpenFrame.

The actual name of the OUTPUTQ table is OFM_BATCH_OUTPUTQ, which must be installed during OpenFrame installation. Each system table is created by using the batchinit tool. For more information about how to run batchinit, refer to "batchinit" in OpenFrame Batch Tool Reference Guide.