Introduction to Tmax

This chapter describes the concepts, architecture, features, and considerations for adopting Tmax.

1. Overview

Tmax stands for Transaction Maximization, meaning the maximization of transaction processing capability. Tmax is a TP-Monitor product that distributes load while ensuring reliable transaction processing across heterogeneous systems in a distributed environment, and takes appropriate action when errors occur.

Tmax provides an efficient development environment with optimized solutions for the client/server environment. It also improves performance and handles failures.

Tmax complies with the X/Open DTP (Distributed Transaction Processing) model, the international standard for distributed transaction processing. It was developed in accordance with the standards established by OSI (Open Systems Interconnection) for the functional distribution of DTP services and for the APIs and interfaces among system components. In addition, Tmax provides transparent application processing across heterogeneous systems in a distributed environment, supports OLTP (On-Line Transaction Processing), and satisfies the ACID (Atomicity, Consistency, Isolation, Durability) transaction properties.

Tmax maximizes performance through transparent application processing and provides an optimized development environment by reliably handling mission-critical legacy applications. Tmax also ensures system reliability by managing load and preventing failures in critical systems that process large volumes of transactions across industries such as finance, public services, telecommunications, manufacturing, and distribution. It is used for large-scale OLTP application development and a variety of services, such as online banking, credit card authorization, and customer and sales management, across diverse industries including airlines, hotels, hospitals, and the defense sector.

For more information about transactions, refer to Transaction in Tmax Application Development Guide.

2. Tmax Architecture

2.1. System Configuration

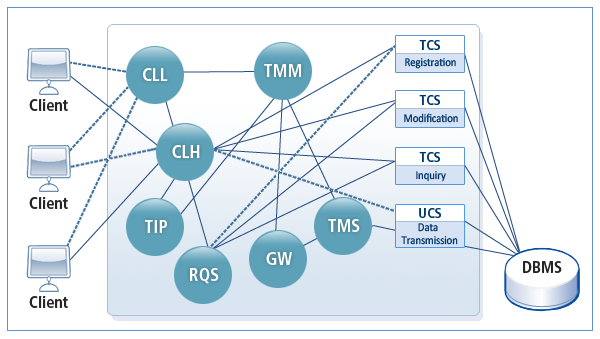

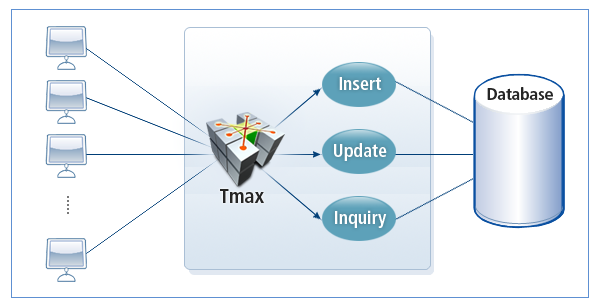

The following figure illustrates the system configuration of Tmax.

-

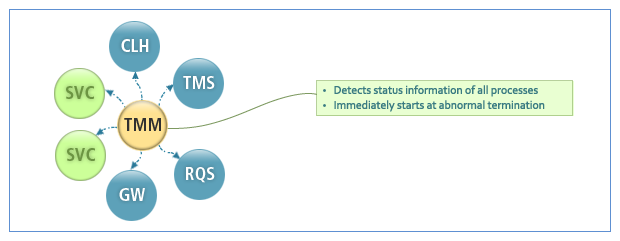

TMM (Tmax Manager)

A core process that operates and manages the Tmax system. TMM manages all shared information in the Tmax system and the following server processes: CLL (Client Listener), CLH (Client Handler), TMS (Transaction Management Server), and AP (Application Program).

The following are the major features of TMM:

| Key Feature | Description |
|---|---|
| Shared Memory Allocation | When the configured environment information is compiled with the cfl command, a binary file is created. TMM loads the binary file into shared memory and manages the Tmax system using the loaded information. |
| Process Management | TMM is the main process that starts, operates, and terminates the entire system. |
| Log Management | TMM manages Tmax system logs (slog) and user logs (ulog). |

-

CLL (Client Listener)

A process that connects clients to Tmax. It listens on a configured port to manage client connections and receives requests from clients.

-

CLH (Client Handler)

Relays messages between clients and servers: it connects to servers, requests services from them, and manages the server connections.

It forwards client requests received through functions such as tpcall to the corresponding server, and relays XID generation and commit/rollback requests in an XA service environment.

-

TMS (Transaction Management Server)

Manages database access and handles distributed transactions in database-related systems. It delivers commit/rollback requests of XA services to the RM (Resource Manager).

-

TLM (Transaction Log Manager)

When a transaction occurs, saves the transaction log to tlog before CLH commits.

-

RQS (Reliable Queue Server)

Manages the disk queue of the Tmax system and reads and writes queue data from and to files.

-

GW (Gateway Process)

Handles inter-domain communication in an environment with multiple domains.

-

Tmadmin (Tmax Administrator)

Monitors Tmax-related information and manages changes in the configuration file.

-

RACD (Remote Access Control Daemon)

Remotely controls all domains in which Tmax is installed.

-

TCS (Tmax Control Server)

Handles business logic at the request of CLH and returns the results.

-

UCS (User Control Server)

Handles business logic at the request of CLH and returns the results. Unlike TCS, the UCS process itself keeps control and can also perform tasks without a client request.

-

TIP (Tmax Information Provider)

Checks system environment and statistics information, and operates and manages the system. (boot/down only)

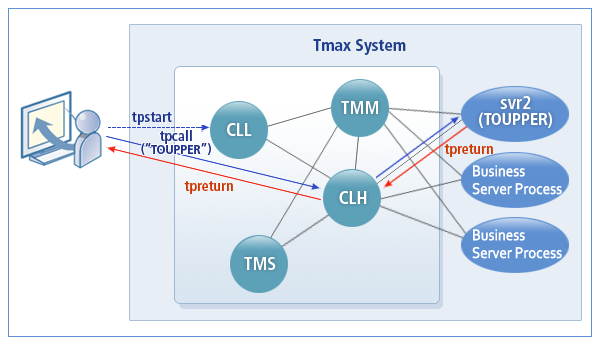

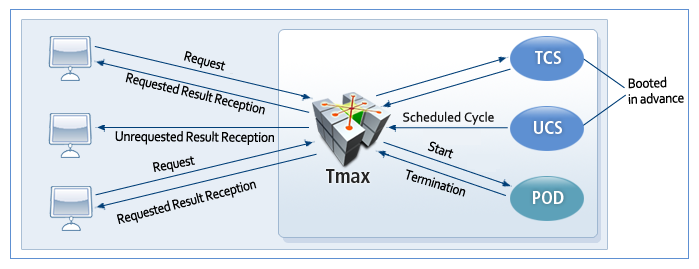

The following figure illustrates the services performed by the Tmax system.

-

When a client calls tpstart, the CLL process accepts the connection request and connects the client to the CLH process.

-

After the connection is established, CLH handles all service requests from the client.

-

CLH receives a client service request (tpcall), analyzes the service, and maps the service to the proper business server process (svr2).

-

The application server process (svr2) processes the service and then returns the result (tpreturn) to CLH.

-

CLH receives the result and sends it to the client.
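The request path above can be modeled in a few lines. This is a minimal Python sketch, not Tmax code: `ClientHandler`, `register`, `call`, and `svr2_toupper` are hypothetical names, the service table stands in for the service information CLH looks up in TIM, and each server process is reduced to a function whose return value plays the role of the reply passed to tpreturn.

```python
from typing import Callable, Dict

# Hypothetical stand-in for a service routine in a server process:
# it receives the request data and returns the reply data.
Service = Callable[[str], str]


class ClientHandler:
    """Toy model of CLH: maps service names to server processes and relays replies."""

    def __init__(self) -> None:
        self._services: Dict[str, Service] = {}

    def register(self, name: str, server: Service) -> None:
        # In Tmax this mapping comes from the configuration loaded into TIM.
        self._services[name] = server

    def call(self, name: str, data: str) -> str:
        # Analyze the request, find the server process that provides the
        # service, forward the data, and relay the result to the client.
        if name not in self._services:
            raise LookupError(f"no server provides service {name!r}")
        return self._services[name](data)


def svr2_toupper(data: str) -> str:
    # Hypothetical business logic running in server process svr2.
    return data.upper()


clh = ClientHandler()
clh.register("TOUPPER", svr2_toupper)
print(clh.call("TOUPPER", "hello"))  # HELLO
```

The client only names the service; which server process handles it is decided by the handler's table, which is the property the later sections on load balancing and the naming service build on.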

2.2. TIM

TIM (Tmax Information MAP) is the core information required to operate the Tmax system. TIM is created by the TMM process and resides in the shared memory managed by Tmax.

TIM can be divided into the following categories according to role:

-

Tmax System Configuration Information

Loads the Tmax configuration file <tmconfig.m> into shared memory and references it as needed.

-

Tmax System Operational Information

Manages the information needed to operate the Tmax system, including how to respond to failures in the system, base information for load balancing, naming service information for accessing each service when the system consists of multiple machines, and application locations.

-

Application Status Information

Manages the status (Ready, Not Ready, Running, etc.) of application processes loaded in a system.

-

Distributed Transaction Information

Serving as a relay for communication with RM, it manages database operational information and sequence information for transaction processing.

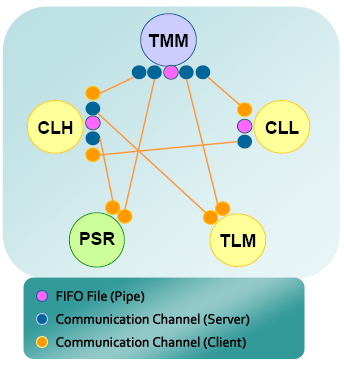

2.3. Socket Communication

Tmax uses UNIX domain sockets for communication between its processes. This method uses the standard socket API unchanged and lets processes communicate through a file in the file system. It is more stable and faster than external communication through network ports. Like a FIFO (named pipe), a UNIX domain socket uses a file, and its messages are managed by the kernel. Unlike a FIFO, however, a socket supports two-way communication, which makes it easy to build environments with multiple clients and servers.

The following figure shows the process of UNIX domain socket communication of CLL, CLH, and TLM. The application server (PSR) connects to CLL, CLH, and TLM.
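To make the idea concrete, the following Python sketch opens a UNIX domain socket at a file path, sends a request, and reads the reply on the same connection. It only illustrates the operating-system mechanism described above; the socket file name `demo.sock` is a hypothetical example and has nothing to do with the files Tmax actually creates.

```python
import os
import socket
import tempfile
import threading


def read_all(sock: socket.socket) -> bytes:
    # Read until the peer signals end-of-data.
    chunks = []
    while chunk := sock.recv(1024):
        chunks.append(chunk)
    return b"".join(chunks)


# A UNIX domain socket is addressed by a file-system path instead of a TCP port.
sock_dir = tempfile.mkdtemp()
sock_path = os.path.join(sock_dir, "demo.sock")  # hypothetical socket file name

server = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
server.bind(sock_path)  # creates the socket file
server.listen(1)


def serve_once() -> None:
    conn, _ = server.accept()
    with conn:
        request = read_all(conn)
        # Reply on the same connection: the channel is two-way, unlike a FIFO.
        conn.sendall(b"reply:" + request)


worker = threading.Thread(target=serve_once)
worker.start()

client = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
client.connect(sock_path)
client.sendall(b"ping")
client.shutdown(socket.SHUT_WR)  # tell the server the request is complete
reply = read_all(client)
client.close()

worker.join()
server.close()
os.unlink(sock_path)
os.rmdir(sock_dir)
print(reply)  # b'reply:ping'
```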

3. Features of Tmax

Tmax has the following features:

-

Process Management

To provide an optimized environment, Tmax controls the number of application server processes instead of creating a server process for each client. Tmax provides a 3-tier client/server environment.

-

Transaction Management

Tmax guarantees data integrity by supporting two-phase commit (2PC) for distributed transactions, and facilitates the use of global transactions by providing simple functions such as tx_begin, tx_commit, and tx_rollback. Its multithreaded transaction manager improves efficiency, and dynamic logging lets it respond rapidly to errors with recovery and rollback, which guarantees stability. Because all transactions are centrally managed, they can be easily scheduled and controlled.

-

Load Balancing

Tmax provides load control through the following 3 methods, increasing throughput and reducing processing time.

-

System Load Management (SLM)

-

Data Dependent Routing (DDR)

-

Dynamic Load Management (DLM)

-

-

Failure Handling

Even when a hardware failure occurs, Tmax continues to operate normally through failover using load balancing and service backup. Even when a software failure brings down a server process, services continue to be provided.

-

Naming Service

The naming service provides location transparency within distributed systems, so services can be called easily by name without knowing where they run.

-

Process Control

Tmax provides three types of server processes. For more information, refer to Process Control.

-

Tmax Control Server (TCS)

-

User Control Server (UCS)

-

Processing On Demand (POD)

-

-

Reliable Queue (RQ)

Data is preserved and reliable processing is ensured through disk queue processing that prevents the loss of requests due to failures.

-

Security Features

Tmax provides data protection based on the Diffie-Hellman algorithm and supports the following 3-level security, including the security features provided by UNIX.

-

Level 1: System Access Authentication

A unique password is set for the entire Tmax system (domain). Only clients that provide the registered password can connect to the Tmax system.

-

Level 2: User Authentication

Only users whose IDs are registered in the Tmax system can use Tmax services, after authentication.

-

Level 3: Service Access Authentication

Services that require special security can be used only by users who have the corresponding privileges. This level is supported in Tmax 4 and later.

For more information about security, refer to Security System in Tmax Application Development Guide.

-

-

Convenient APIs and Various Communication Methods

Tmax supports the following communication methods: Synchronous communication, Asynchronous communication, Conversational communication, Request Forwarding, Notify, and Broadcasting. Tmax provides convenient APIs for these methods.

-

System and Resource Management

-

The following statuses of the entire system can be monitored: process status, service queue status, number of processed services, and average service processing time. System status and queue management statistics can be analyzed and reported.

-

Integration of application and database management enables efficient resource management.

-

-

Multiple Domains and Various Gateway Services

Tmax enables data exchange across remote distributed systems, supports easy integration between systems on different platforms, and provides various gateway modules, including SNA CICS, SNA IMS, TCP CICS, TCP IMS, and OSI TP. It also provides inter-domain transaction service processing and routing functions.

-

Various Client Agents

Tmax provides various agents that enable easy migration from a 2-tier system into a 3-tier system.

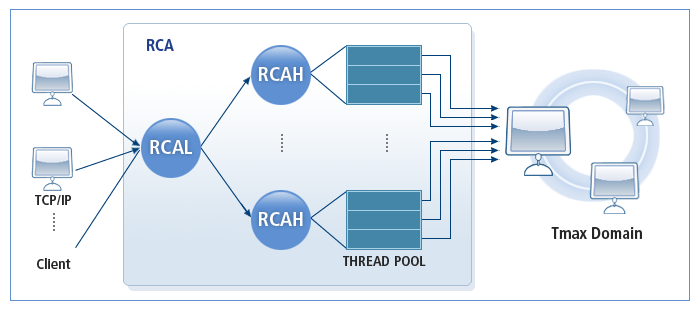

Classification Description Raw Client Agent (RCA)

Supports multiple ports that efficiently handle processes with the multi-threading method.

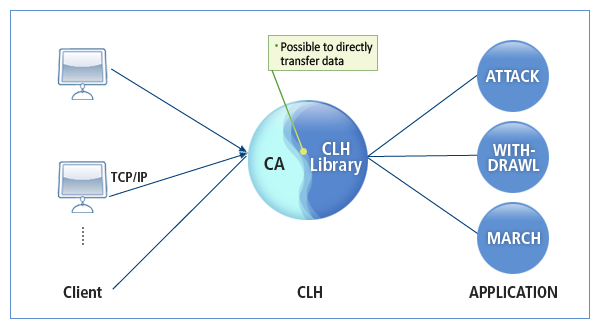

Simple Client Agent (SCA)

Supports multiple ports that can handle both non-Tmax and Tmax clients.

-

Various Development Methods

| Classification | Description |
|---|---|
| Real Data Processor (RDP) | Delivers data directly using UDP communication instead of going through the Tmax system. |
| Window Control | Provides the WinTmax client library for multi-window configurations and enables concurrent handling of multiple data streams. |

-

Extensibility

-

Integration with the Web

If the client/server environment and the web environment are integrated using Java Applet/Servlet, PHP, etc., fast response time can be ensured and system performance can be improved. To facilitate service integration, Tmax provides WebT. For more information about WebT, refer to Tmax WebT User Guide.

-

Integration with Mainframe

Host-link enables access to application services in legacy systems such as IBM mainframes as if they were services in the client/server environment.

-

Easy Migration from Other Middleware

Systems developed with other middleware, such as Tuxedo, TopEnd, and Entera, can be easily migrated to Tmax without any changes to the source code. This provides improved performance, advanced technical support, and cost savings.

-

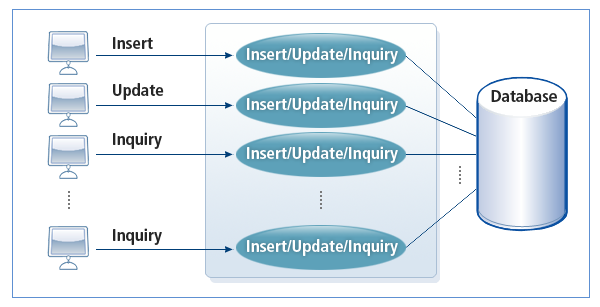

3.1. Process Management

The existing 2-tier client/server environment is the most common environment for developing applications. In this environment, a separate server process is created for each client, so the number of server processes grows with the number of clients. As a result, much time is spent creating processes and opening and closing files and databases. Furthermore, because a server process can be used only by the client connected to it, server process utilization is very low and maintenance costs are very high.

To address these issues, a 3-tier client/server structure using TP-Monitor middleware was adopted. Tmax, a TP-Monitor product, controls the number of created processes, schedules idle server processes, and optimizes system operation through server process tuning.

The following figure illustrates the 2-tier client/server structure:

The following figure illustrates the 3-tier client/server structure:

Better systems can be built in the 3-tier environment compared to the 2-tier environment in terms of performance, extensibility, management, and failover.
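The central difference between the two structures is that many clients share a small, fixed set of server workers instead of each owning one. The Python sketch below illustrates that idea only; it uses threads from `concurrent.futures` in place of Tmax server processes, and `NUM_SERVERS = 4` is an arbitrary value chosen for the example, not a Tmax default.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Tuple

NUM_CLIENTS = 50  # many clients...
NUM_SERVERS = 4   # ...share a small pool of server workers (arbitrary size)


def handle_request(client_id: int) -> Tuple[int, str]:
    # Each request is served by whichever pooled worker is idle; the worker's
    # thread name identifies the "server process" that handled it.
    return client_id, threading.current_thread().name


with ThreadPoolExecutor(max_workers=NUM_SERVERS, thread_name_prefix="svr") as pool:
    results = list(pool.map(handle_request, range(NUM_CLIENTS)))

workers_used = {name for _, name in results}
print(len(results), len(workers_used))  # 50 requests, at most 4 distinct workers
```

In a 2-tier design the same load would require 50 server processes, each sitting idle whenever its client is not sending requests.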

The following are comparisons of 2-tier and 3-tier client/server environments.

| Classification | 2-tier Environment | 3-tier Environment |
|---|---|---|
| Application areas | Unit-level tasks in small departments; single server; small number of users (approximately fewer than 50); batch processing tasks | Enterprise-wide tasks; multiple servers; large number of users (50 or more); OLTP tasks and high-volume transaction processing |
| Advantages | Reduced program development time (easier testing); lower initial implementation costs; simplified program development | Modularized application development; interoperability across heterogeneous hardware and database environments; improved performance through optimal use of system resources; easy extensibility; system management, load balancing, failover, and additional security features |
| Disadvantages | Significant performance degradation with increasing transaction volumes; cannot interoperate across heterogeneous hardware and database environments; limited extensibility; cannot implement system management, load balancing, failover, and additional security features | Long application development time (client/server integration testing); increased initial implementation costs; separate client/server development even for simple programs |

3.2. Distributed Transaction

A transaction processes work across various resources as a single logical unit and maintains data integrity among distributed resources. A distributed transaction is a transaction among distributed systems on a network, and it must meet the ACID (Atomicity, Consistency, Isolation, Durability) transaction properties. The Tmax system guarantees ACID for distributed transactions in heterogeneous DBMSs and multiple homogeneous DBMSs.

| Classification | Description |
|---|---|
| Atomicity | All-or-nothing proposition: either all work in a transaction is performed, or none of it is. |
| Consistency | A successfully completed transaction must leave shared resources in a consistent state. |
| Isolation | While a transaction is in progress, its work cannot be accessed by other operations, and its results cannot be shared before it is committed. |
| Durability | Once a transaction is committed, its results are always maintained. |

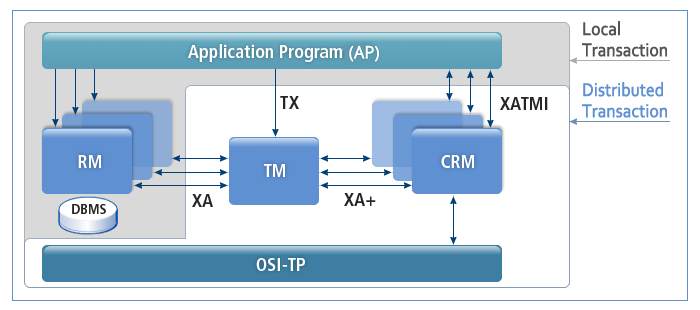

Tmax manages distributed transactions and complies with the X/Open DTP model, which consists of the AP (Application Program), TM (Transaction Manager), RM (Resource Manager), and CRM (Communication Resource Manager). Tmax supports ATMI (Application-to-Transaction Monitor Interface), the set of standard functions that comply with the X/Open DTP model, and it binds and handles transactions that occur in heterogeneous DBMSs that comply with the X/Open DTP model.

The following figure illustrates the X/Open DTP structure.

-

Application Program (AP)

Defines the boundaries of a DT (distributed transaction).

-

Resource Manager (RM)

Provides a feature to access resources such as a database.

-

Transaction Manager (TM)

Creates an ID for each DT, manages the progress, and provides a recovery feature for both completion and failure.

-

Communication Resource Manager (CRM)

Controls communication between distributed APs.

-

Open System Interconnection-Transaction Processing (OSI-TP)

Handles communication with a TM in a separate domain.

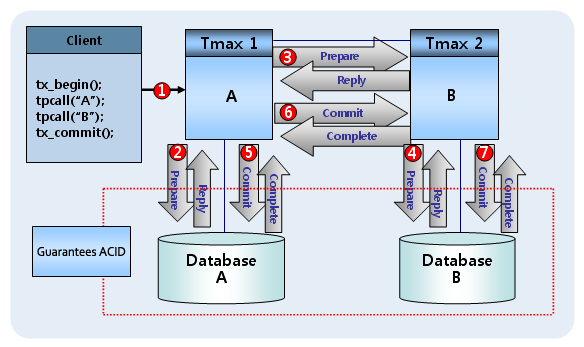

When distributed transactions are handled, 2PC (two-phase commit) is supported for data integrity, and APIs are provided for global transactions. Distributed transactions that span multiple heterogeneous hardware platforms and databases in a physically distributed environment are managed as a single unit.

-

2PC (Two-phase commit) protocol

2PC is used to guarantee transaction properties for global transactions related to more than one homogeneous or heterogeneous database. 2PC refers to a two-phase process to fully ensure ACID properties when more than one database is integrated.

-

Phase 1: Prepare Phase

Checks whether all databases related to a transaction are prepared to handle it. This phase asks each database, network, or server participating in a single distributed transaction whether it can commit or roll back and prepares it to do so; each prepared participant then returns a signal.

-

Phase 2: Commit Phase

If all databases send normal signals, the transaction is committed. If any database sends an abnormal signal, a rollback is performed to complete the global transaction. In this phase, a commit message is sent to all nodes, and each node requests a commit from its RM. Data changes are complete when all nodes participating in the Commit Phase have finished their commits and have notified the node that called tx_commit() of successful completion.

The following figure illustrates how the two-phase commit (2PC) process works.

2PC (Two-phase commit)

-

-

Global Transaction

Multiple heterogeneous hardware and databases are handled as a single logical unit (transaction). Global transactions involving DBMSs across two or more homogeneous or heterogeneous systems are managed through the two-phase commit, ensuring data integrity. The Tmax system supports global transactions by providing simple functions such as tx_begin, tx_commit, and tx_rollback. During global transaction processing, communication between nodes is handled through the client handler.

The following describes how global transactions operate.

-

Phase 1: Prepare Phase

In this phase, the node that initiated the distributed transaction (global coordinator) checks whether it is possible to perform commit or rollback on all participating nodes.

-

Phase 2: Commit Phase

Nodes participating in the distributed transaction commit the transaction after receiving a response from the global coordinator indicating that the distributed commit is prepared. If any node is not prepared, the transaction must be rolled back.

-

-

Recovery / Rollback

If a transaction fails, any changes it made to the RMs in use are restored to their previous state.

-

Centralized Transaction Management

Even if nodes are physically distant, transactions between nodes are centrally managed and controlled.

-

Transaction Scheduling

Transactions are managed with concurrency control techniques based on priority.
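The two-phase commit flow described above can be simulated in a single process. This Python sketch shows only the voting logic: `ResourceManager` and `two_phase_commit` are hypothetical names, there is no logging, timeout, or recovery, and it is not the XA interface that Tmax uses between TMS and the RMs (an application would instead call tx_begin, tx_commit, and tx_rollback).

```python
import uuid
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ResourceManager:
    """Toy participant (for example, one database) in a global transaction."""
    name: str
    can_commit: bool = True
    state: str = "active"

    def prepare(self) -> bool:
        # Phase 1: vote on whether this participant is able to commit.
        self.state = "prepared" if self.can_commit else "not prepared"
        return self.can_commit

    def commit(self) -> None:
        self.state = "committed"

    def rollback(self) -> None:
        self.state = "rolled back"


def two_phase_commit(participants: List[ResourceManager]) -> Tuple[str, str]:
    """Coordinator: returns (transaction ID, decision)."""
    xid = uuid.uuid4().hex  # ID identifying the global transaction
    # Phase 1 (prepare): collect a vote from every participant.
    votes = [rm.prepare() for rm in participants]
    # Phase 2 (commit): commit everywhere only if every vote was "yes";
    # otherwise roll back everywhere so that no participant commits alone.
    decision = "commit" if all(votes) else "rollback"
    for rm in participants:
        if decision == "commit":
            rm.commit()
        else:
            rm.rollback()
    return xid, decision


ok = [ResourceManager("rm1"), ResourceManager("rm2")]
print(two_phase_commit(ok)[1], [rm.state for rm in ok])
# commit ['committed', 'committed']

bad = [ResourceManager("rm1"), ResourceManager("rm2", can_commit=False)]
print(two_phase_commit(bad)[1], [rm.state for rm in bad])
# rollback ['rolled back', 'rolled back']
```

A single "no" vote in Phase 1 is enough to roll back every participant, which is what keeps the databases consistent with each other.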

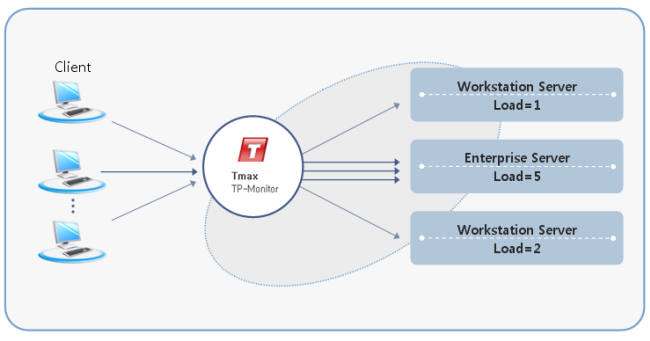

3.3. Load Balancing

Tmax provides the load balancing feature to increase throughput and reduce processing time by using the following 3 methods: SLM (System Load Management), DDR (Data Dependent Routing), and DLM (Dynamic Load Management).

Load Balancing Using SLM

SLM (System Load Management) uses a defined load ratio to distribute loads. The load value is set according to hardware performance. If the number of service requests of a node exceeds the load value, the service connection is switched to another node. The load value can be set for each node.

The following describes how SLM operates in each process.

-

CLH receives the client’s request.

-

CLH uses TIM to determine whether the request is for an SLM service and checks the throughput of each server group.

-

CLH schedules the request to the appropriate server group.

The following figure illustrates load balancing using SLM. If the load values of Node 1, Node 2, and Node 3 are 1, 5, and 2, respectively, Node 1 handles 1 job, the next job is handled by Node 2, and the next job by Node 3. The next 3 consecutive jobs are handled by Node 2.
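The following Python sketch shows one way to distribute jobs in proportion to load values. The document does not specify the exact algorithm Tmax uses, so this swaps in smooth weighted round-robin, a well-known technique; the order it produces differs from the figure, but over every cycle of 8 jobs the nodes with load values 1, 5, and 2 receive 1, 5, and 2 jobs. `weighted_schedule` is a hypothetical name.

```python
from collections import Counter
from typing import Dict, List


def weighted_schedule(loads: Dict[str, int], num_jobs: int) -> List[str]:
    """Smooth weighted round-robin: jobs are spread in proportion to load values."""
    total = sum(loads.values())
    credit = {node: 0 for node in loads}
    order = []
    for _ in range(num_jobs):
        # Every node earns credit equal to its load value...
        for node, load in loads.items():
            credit[node] += load
        # ...and the node with the most credit takes the job and pays back the total.
        chosen = max(credit, key=credit.get)
        credit[chosen] -= total
        order.append(chosen)
    return order


jobs = weighted_schedule({"Node 1": 1, "Node 2": 5, "Node 3": 2}, 8)
print(Counter(jobs))  # per-node totals over 8 jobs: 1, 5, and 2
```

Because credits return to zero after each full cycle, the proportions hold exactly for any multiple of the total load, and heavy nodes are interleaved with light ones rather than receiving all their jobs in one burst.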

Load Balancing Using DDR

DDR (Data Dependent Routing) uses data values to distribute loads. If multiple nodes provide the same service, requests can be routed among them according to the data range into which a value falls: the value of a designated field in the request is checked, and the service is requested from the appropriate server group.

The following describes how DDR operates in each process.

-

CLH receives the client’s request.

-

CLH uses TIM to determine whether the request is for a DDR service and checks the value of the routing field.

-

CLH schedules the request to the defined server group.

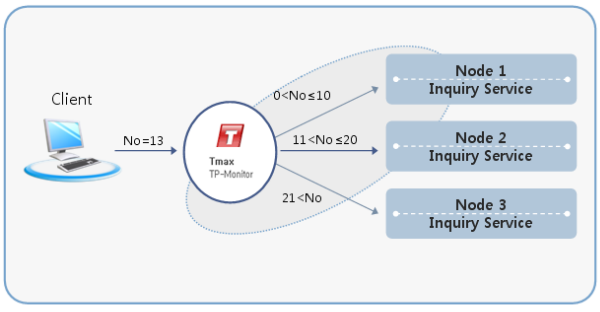

The following figure illustrates load balancing using DDR. In the figure below, the customer search service is processed by different nodes based on age: customers aged 0-10 in Node 1, customers aged 11-20 in Node 2, and all other ages in Node 3.
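The routing in this example can be expressed as a lookup over range boundaries. This Python sketch reproduces the age ranges from the figure; the table layout (`ROUTES`, `DEFAULT_NODE`) and the function name are hypothetical and do not reflect the Tmax configuration syntax for DDR.

```python
import bisect
from typing import List, Tuple

# Hypothetical routing table mirroring the figure: (upper bound of age range, node).
ROUTES: List[Tuple[int, str]] = [(10, "Node 1"), (20, "Node 2")]
DEFAULT_NODE = "Node 3"  # all other ages


def route_by_age(age: int) -> str:
    """Choose the server group from the value of the routing field (age)."""
    bounds = [upper for upper, _ in ROUTES]
    # bisect_left finds the first range whose upper bound is >= age,
    # so 0-10 maps to Node 1 and 11-20 maps to Node 2.
    i = bisect.bisect_left(bounds, age)
    if age >= 0 and i < len(ROUTES):
        return ROUTES[i][1]
    return DEFAULT_NODE


for age in (7, 15, 42):
    print(age, route_by_age(age))
```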

Load Balancing Using DLM

DLM (Dynamic Load Management) dynamically selects a handling group according to load ratio. If loads are concentrated at a certain node, Tmax distributes the loads with this method, which dynamically adjusts the load sizes. System loads can be checked with the queuing status of a running process.

The Tmax system manages a memory queue for each process and stores a service request in this queue if no process is currently available to handle it. The number of requests in the memory queue represents the system load.

The following describes how DLM operates in each process.

-

CLH receives the client’s request.

-

CLH uses TIM to determine whether the request is for a DLM service and checks the queue length of each server.

-

If the queue length reaches the threshold, CLH schedules the request to the next server group.

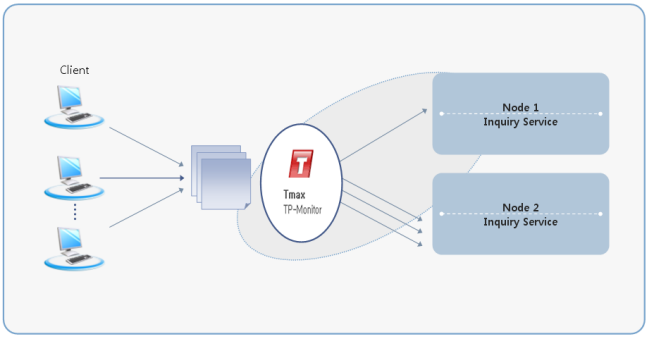

The following figure is an example of load balancing by DLM. In the figure below, it is assumed that Node 1 and Node 2 have the same services. If service requests are concentrated at Node 1, Tmax distributes the loads by using the dynamic distribution algorithm.
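The threshold rule in the steps above can be sketched as follows. This is an illustration in Python, not the Tmax scheduler: the function name and the `threshold` parameter are hypothetical, and the fallback used when every group has reached the threshold (pick the shortest queue) is an assumption, since the document does not describe that case.

```python
from typing import Dict, List


def select_group(groups: List[str], queue_len: Dict[str, int], threshold: int) -> str:
    """Pick the first server group, in order, whose memory queue is below the threshold."""
    for group in groups:
        if queue_len[group] < threshold:
            return group
    # Assumption (not from the Tmax documentation): when every group has reached
    # the threshold, fall back to the group with the shortest queue.
    return min(groups, key=lambda g: queue_len[g])


groups = ["Node 1", "Node 2"]
print(select_group(groups, {"Node 1": 1, "Node 2": 0}, threshold=3))  # Node 1
print(select_group(groups, {"Node 1": 3, "Node 2": 0}, threshold=3))  # Node 2
print(select_group(groups, {"Node 1": 7, "Node 2": 4}, threshold=3))  # Node 2
```

Requests keep going to Node 1 while it is lightly loaded and spill over to Node 2 only when requests start piling up in Node 1's queue.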

3.4. Failure Handling

Tmax ensures high availability of system resources by providing uninterrupted services even if a failure occurs in machines, networks, systems, or server processes. Failures can be categorized as hardware or software failures.

Hardware Failure

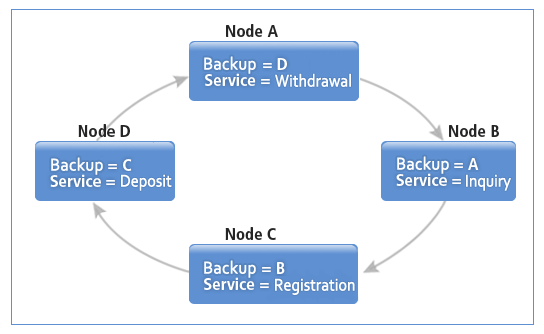

In the event of hardware failure, normal operation is possible through load balancing or service backup.

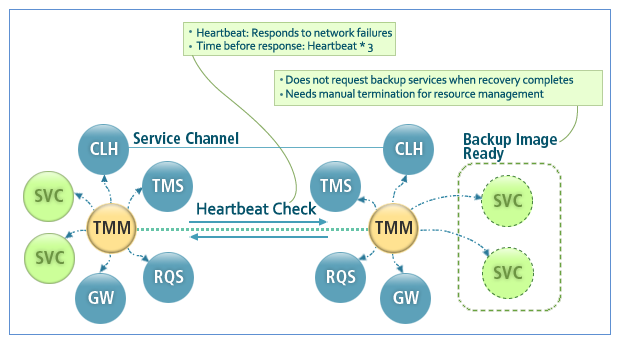

Since Tmax is a peer-to-peer system in which nodes monitor each other, failures can be handled immediately under the same conditions, regardless of the number of nodes.

Hardware failures can be addressed through the following two failure-handling methods.

-

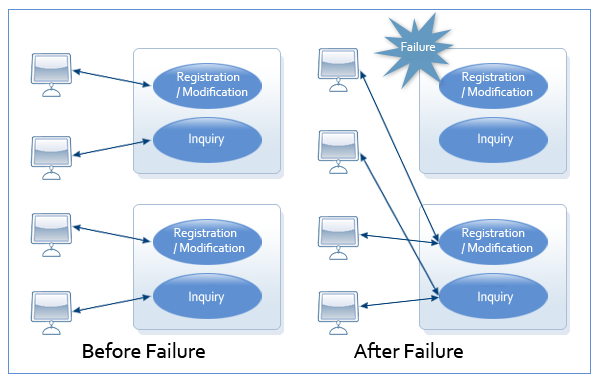

Load Balancing

In an environment in which a service is provided by multiple nodes, if a failure occurs in a node, another node provides the service without interruption. The client reconnects to a backup node and requests the service.

Failover by Load Balancing

-

Service Backup

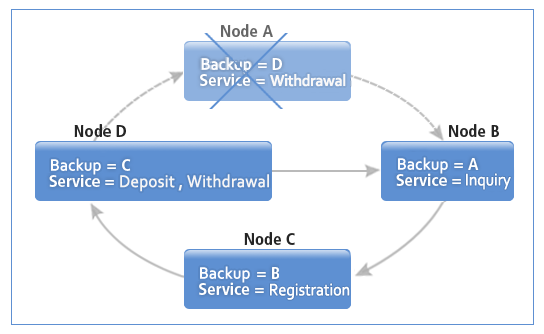

If a failure occurs in a node, another node runs a prepared backup process and handles the service.

-

Before a Failure (Normal Operation)

Failures in any node can be fully handled via a peer-to-peer architecture.

Failover by Service Backup - Before a Failure

-

After a Failure

The designated backup node provides uninterrupted service in the event of a failure.


Failover by Service Backup - After a Failure
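From the caller's point of view, both failure-handling methods amount to trying another node that can provide the same service. The following Python sketch illustrates that client-side retry; `NodeDown`, `make_node`, and `call_with_failover` are hypothetical names, and real node failures would show up as connection errors rather than a custom exception.

```python
from typing import Callable, List

Handler = Callable[[str], str]


class NodeDown(Exception):
    """Raised when a node cannot be reached."""


def make_node(name: str, up: bool) -> Handler:
    # Hypothetical stand-in for a node that provides the same service.
    def handle(data: str) -> str:
        if not up:
            raise NodeDown(name)
        return f"{name} handled {data}"
    return handle


def call_with_failover(data: str, nodes: List[Handler]) -> str:
    """Send the request to the first reachable node, in priority order."""
    failed = []
    for handle in nodes:
        try:
            return handle(data)
        except NodeDown as exc:
            failed.append(str(exc))  # remember the failed node, then try the next one
    raise RuntimeError(f"no node available; failed: {failed}")


nodes = [make_node("Node 1", up=False), make_node("Node 2", up=True)]
print(call_with_failover("req-1", nodes))  # Node 2 handled req-1
```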

-

Software Failure

If a server process terminates abnormally due to an internal software bug or a user error, it can be restarted automatically. Note that system processes such as TMS, CAS, and CLH are restarted repeatedly without any restart conditions; however, if one of them terminates abnormally, the server processes running with it may also be terminated.
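Automatic restart of server processes is essentially a supervision loop with a limit. The Python sketch below shows the idea with ordinary function calls instead of operating-system processes; `supervise` and its `max_restarts` parameter are hypothetical, loosely corresponding to the server restart count that can be changed dynamically (see System and Resource Management).

```python
from typing import Callable


def supervise(run_server: Callable[[], None], max_restarts: int) -> int:
    """Rerun a server routine after abnormal termination, at most max_restarts times.

    Returns how many restarts were needed before the routine ended normally.
    """
    restarts = 0
    while True:
        try:
            run_server()
            return restarts          # normal termination: stop supervising
        except Exception:
            if restarts >= max_restarts:
                raise                # restart limit reached: give up
            restarts += 1            # abnormal termination: restart it


attempts = 0


def flaky_server() -> None:
    # Hypothetical server that crashes on its first two runs.
    global attempts
    attempts += 1
    if attempts <= 2:
        raise RuntimeError("server process terminated abnormally")


print(supervise(flaky_server, max_restarts=5))  # 2
```

Capping the number of restarts keeps a server with a persistent bug from being restarted in an endless loop.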

3.5. Naming Service

Tmax guarantees location transparency with a naming service, which provides service location information within distributed systems so that services can be called easily. A client does not need to know the server address; it can obtain the server information from the service name alone. This simplifies programming, because the desired service can be called clearly with only its name.

3.6. Process Control

Tmax supports the following 3 server processes:

-

Tmax Control Server (TCS)

TCS runs passively in response to client requests and must be booted in advance to handle them. It is the most typical method for handling client requests: it receives a caller's request from a Tmax handler, handles the job, returns the result to Tmax, and waits for the next request.

-

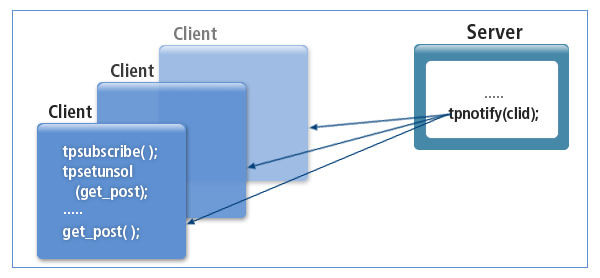

User Control Server (UCS)

UCS actively transfers data without a caller request, a feature unique to Tmax. Like TCS, it must be booted in advance. In addition to handling client requests like TCS, it can periodically send data to clients without a request. In other words, UCS handles client requests like TCS and adds the ability to process applications actively and on its own initiative.

-

Processing On Demand (POD)

POD is executed only when there is a client request and terminates after handling the job. It is appropriate for infrequently performed tasks.

For more information about how to control server processes, refer to Server Program in Tmax Application Development Guide.
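The behavioral difference between the three server types can be sketched in Python. Threads stand in for processes and a queue stands in for the delivery of requests by Tmax; `tcs_server`, `ucs_server`, and `pod_call` are hypothetical names, and none of this is the Tmax server programming interface.

```python
import queue
import threading
from typing import List, Optional


def tcs_server(requests: "queue.Queue[Optional[str]]", replies: List[str]) -> None:
    """TCS-style: booted in advance, acts only when a request arrives."""
    while True:
        req = requests.get()  # blocks until a caller sends a request
        if req is None:       # hypothetical shutdown signal
            return
        replies.append(f"TCS handled {req}")


def ucs_server(requests: "queue.Queue[str]", replies: List[str],
               pushed: List[str], rounds: int) -> None:
    """UCS-style: booted in advance and keeps control of its own loop."""
    for n in range(rounds):
        pushed.append(f"UCS update {n}")  # unsolicited data sent to clients
        try:
            req = requests.get_nowait()   # it can also serve requests, like TCS
        except queue.Empty:
            continue
        replies.append(f"UCS handled {req}")


def pod_call(req: str) -> str:
    """POD-style: a worker is started for this one request and ends afterwards."""
    out: List[str] = []
    worker = threading.Thread(target=lambda: out.append(f"POD handled {req}"))
    worker.start()
    worker.join()  # the worker has terminated once join() returns
    return out[0]


tcs_q: "queue.Queue[Optional[str]]" = queue.Queue()
tcs_replies: List[str] = []
tcs = threading.Thread(target=tcs_server, args=(tcs_q, tcs_replies))
tcs.start()  # "booted in advance"
tcs_q.put("req-1")
tcs_q.put(None)
tcs.join()

ucs_q: "queue.Queue[str]" = queue.Queue()
ucs_q.put("req-2")
ucs_replies: List[str] = []
pushed: List[str] = []
ucs_server(ucs_q, ucs_replies, pushed, rounds=3)

print(tcs_replies)        # ['TCS handled req-1']
print(ucs_replies)        # ['UCS handled req-2']
print(pushed)             # ['UCS update 0', 'UCS update 1', 'UCS update 2']
print(pod_call("req-3"))  # POD handled req-3
```

The TCS loop does nothing until a request arrives, the UCS loop produces output on every iteration whether or not a request is waiting, and the POD worker exists only for the duration of one request.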

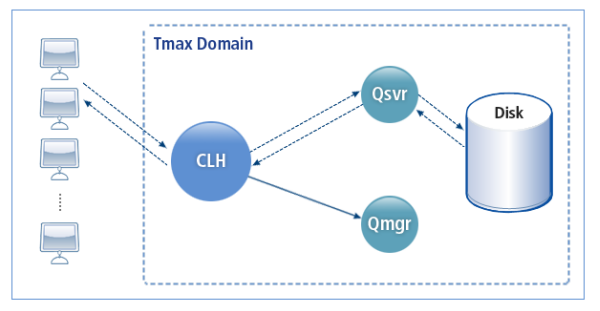

3.7. RQ Feature

RQ (Reliable Queue) enables services to be handled reliably by preventing requests from being lost due to failures. If a job takes a long time or requires reliability, the request is saved to disk before it is handled. Even if a system failure or other critical error occurs, the job can be handled normally after the system recovers.

Whether a requested service was saved correctly to disk can be checked with the return value of the tpenq() function. Queued jobs do not affect other jobs because they are handled independently by the Queue Manager (Qmgr). Whether Qmgr has successfully completed a job can be checked with the tpdeq() function.

3.8. Security Feature

Tmax provides a data protection feature based on the Diffie-Hellman algorithm and supports a 5-step security feature, which includes the UNIX security feature.
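The Diffie-Hellman algorithm lets two parties agree on a shared secret while sending only public values. The Python sketch below shows that key-agreement arithmetic with the small textbook values p = 23 and g = 5; it says nothing about how Tmax generates its parameters or uses the resulting key, which this section does not describe, and such small numbers provide no real security.

```python
# Toy public parameters (textbook values). Real systems use primes of
# 2048 bits or more; these small numbers are for illustration only.
P = 23  # public prime modulus
G = 5   # public generator


def public_value(private: int) -> int:
    """Value each side sends over the network: G^private mod P."""
    return pow(G, private, P)


def shared_secret(own_private: int, peer_public: int) -> int:
    """Both sides compute the same number without ever sending it."""
    return pow(peer_public, own_private, P)


a, b = 6, 15         # private values, never transmitted
A = public_value(a)  # 8, sent from one side to the other
B = public_value(b)  # 19, sent in the opposite direction
print(shared_secret(a, B), shared_secret(b, A))  # 2 2
```

Both sides arrive at 2 because (g^b)^a and (g^a)^b are equal modulo p, while an eavesdropper sees only 8 and 19.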

3.9. System and Resource Management

System Management

The following statuses of the entire system can be monitored: process status, service queuing status, the number of handled services, and the average time of handling services. System status and queue management system statistics can be analyzed and reported.

System management supports the following features:

-

Static System Management

The general system environment is set according to the user environment for the Tmax system components such as a domain, node, server group, server, service, etc.

-

Dynamic System Management

The following components can be changed while Tmax is running:

| Component | Description |
|---|---|
| Domain | Changes the service timeout, transaction timeout, and node (machine) live check time. |
| Node | Sets the timeout for the message queue. |
| Server Group | Modifies the load values for each node and the load balancing method. |
| Server | Modifies the maximum queue count, server start count during queuing, server restart count, number of servers, and server priority. |
| Service | Modifies the priority and timeout for each service. |

-

Monitoring and Administration

-

Dynamic environment settings can be changed.

-

Various reports can be displayed and various statistical information is provided such as transaction throughput of a server, the number of handled jobs by service, average processing time, etc.

-

Resource Management

Resources are efficiently managed through integrated management of applications and databases.

In existing systems, resources are wasted because the whole system cannot be managed. Tmax manages applications using the centralized monitoring feature for the entire distributed system.

If homogeneous or heterogeneous databases are used together by a single application, Tmax integrates and manages them at the application level.

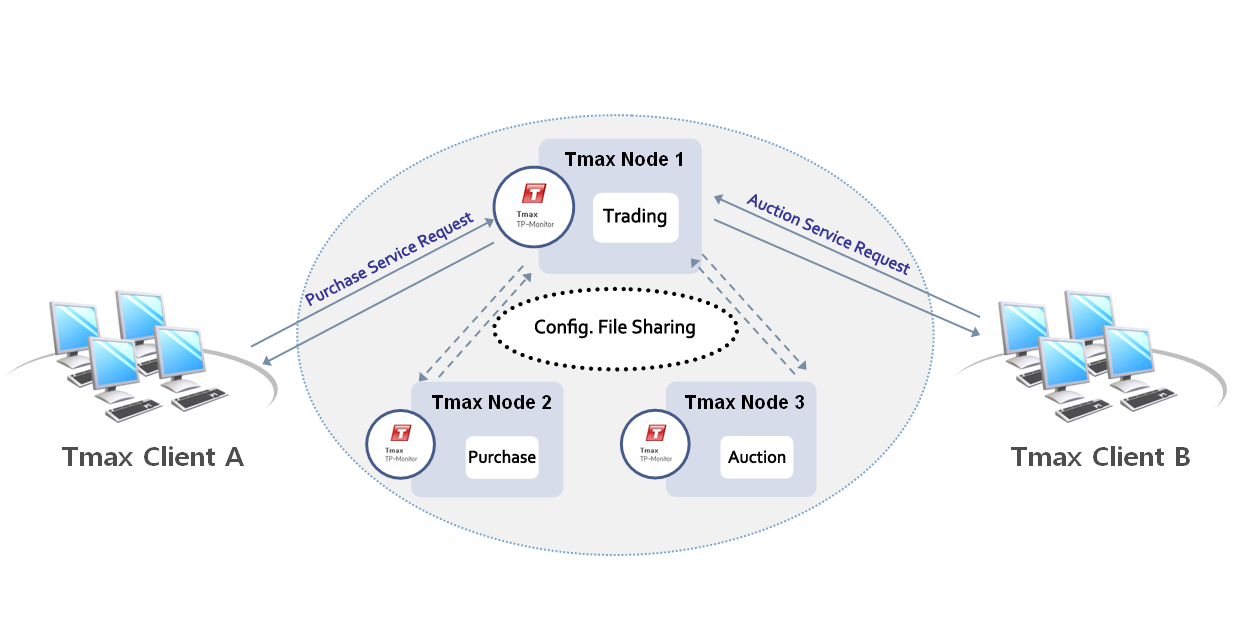

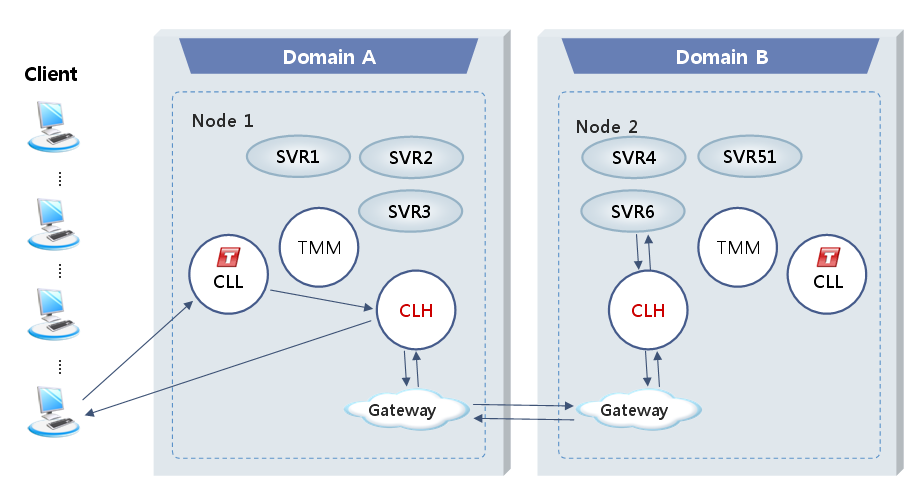

3.10. Multiple Domains and Various Gateway Services

The Tmax domain is the top-level unit that is independently managed (started / terminated) by Tmax. Even if a system is distributed by region or application and managed in multiple domains, the domains can be integrated. Additionally, the multi-domain 2PC function is supported through a gateway.

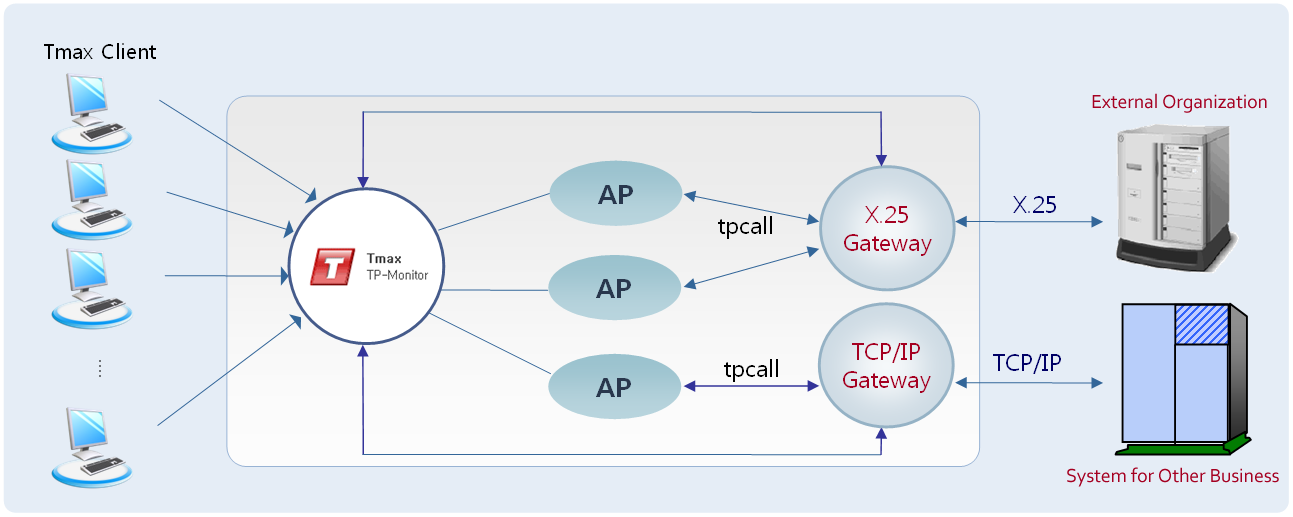

Remote distributed systems can exchange data, heterogeneous platform-based systems can be easily integrated, and various gateway modules, such as SNA CICS, SNA IMS, TCP CICS, TCP IMS, and OSI TP, are supported. It is possible to handle a transaction service and route it to multiple domains.

Multiple domains solve problems that may occur when many nodes are managed in a single domain, such as the difficulty of managing all nodes and the rapid increase in communication traffic between nodes. Furthermore, a requested service can be handled in any system. Service handling in a multi-domain environment differs depending on how services are processed, routed, and transacted between domains.

The following figure shows the flow of calling a service in a multi-domain environment. Multiple domains are connected via gateways. A domain gateway acts as a server or client when connecting to another gateway. There is no start order for gateways.

Tmax provides various gateways, such as TCP/IP, X.25, and SNA, for easy integration with the systems of other business areas and external organizations. A gateway enables efficient communication and convenient management by separating business logic from related modules. Since gateways are managed by Tmax, they can be automatically recovered after a failure and do not need to be managed by an administrator.

The following figure illustrates the role of a gateway. If a client requests a service, it receives the result from a domain, which provides the proper service. At this time, inter-domain routing is possible via a gateway according to transaction services or service values.
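The routing decision a domain makes can be sketched as "serve locally if possible, otherwise forward through a gateway". The Python classes and service names below (`Domain`, `BALANCE`, `AUTH`) are hypothetical illustrations, not Tmax gateway configuration, and a request is forwarded at most once to avoid loops.

```python
from typing import Callable, Dict

Service = Callable[[str], str]


class Domain:
    """Toy domain with local services and gateway routes to other domains."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.local: Dict[str, Service] = {}
        self.gateway_routes: Dict[str, "Domain"] = {}  # service name -> remote domain

    def call_local(self, svc: str, data: str) -> str:
        if svc not in self.local:
            raise LookupError(f"{svc!r} is not provided by domain {self.name}")
        return self.local[svc](data)

    def call(self, svc: str, data: str) -> str:
        # Serve locally when possible; otherwise forward once through the gateway.
        if svc in self.local:
            return self.call_local(svc, data)
        if svc in self.gateway_routes:
            return self.gateway_routes[svc].call_local(svc, data)
        raise LookupError(f"no route to service {svc!r} from domain {self.name}")


bank = Domain("BANK")
card = Domain("CARD")
bank.local["BALANCE"] = lambda acct: f"BANK: balance of {acct}"
card.local["AUTH"] = lambda num: f"CARD: authorized {num}"
bank.gateway_routes["AUTH"] = card  # AUTH is reached through the BANK-CARD gateway

print(bank.call("BALANCE", "A-1"))  # BANK: balance of A-1
print(bank.call("AUTH", "4000"))    # CARD: authorized 4000
```

The client of domain BANK calls AUTH exactly as it calls a local service; only the domain's routing table knows that the request crosses a gateway.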

3.11. Various Client Agents

Tmax provides various agents to facilitate easy conversion from a 2-tier system to a 3-tier system.

RCA (Raw Client Agent)

RCA connects an existing communication program that cannot use the Tmax client library to Tmax through a TCP/IP socket, allowing that program to use services provided by the Tmax system. It supports services in REMOTE or LOCAL mode. With the existing Tmax client library, a single client program acts as a single Tmax client. Because RCA is developed using multithreading, each thread in RCA acts as a single Tmax client. Up to 32 ports can be specified to support various clients.

RCA provides reliable failover with RCAL, which controls user connections, and RCAH, which contains the user logic. It also supports administration tools such as rcastat and rcakill.

The following figure shows the RCA structure:

SCA (Simple Client Agent)

SCA connects an existing communication program that cannot use the Tmax client library to Tmax over TCP/IP, and enables the program to use services provided by the Tmax system. It consists of a customizing routine and a library that is linked with CLH (Client Handler).

Network tasks, such as connecting to and disconnecting from clients and sending and receiving data, are handled internally by the system. A developer enables client connections by specifying the client and its predefined port number in the Tmax configuration file, and can modify and supplement both the received data and the data to be sent.
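The customizing routine is where received data can be changed or supplemented before it reaches a Tmax service. As a minimal sketch, assume a non-Tmax client sends a raw message whose first 8 bytes hold a space-padded service name, followed by the payload; the routine below splits such a message. The message layout, SVC_NAME_LEN, and parse_raw_request are hypothetical and do not reflect the actual SCA customization interface.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define SVC_NAME_LEN 8  /* hypothetical fixed-width service-name field */

/* Hypothetical inbound customization hook: splits a raw message
 * "<8-char service name, space padded><payload>" into a NUL-terminated
 * service name and a pointer/length for the payload.
 * Returns 0 on success, -1 if the message is missing or too short. */
int parse_raw_request(const char *raw, size_t raw_len,
                      char svc[SVC_NAME_LEN + 1],
                      const char **payload, size_t *payload_len)
{
    assert(svc != NULL && payload != NULL && payload_len != NULL);
    if (raw == NULL || raw_len < SVC_NAME_LEN)
        return -1;

    memcpy(svc, raw, SVC_NAME_LEN);
    svc[SVC_NAME_LEN] = '\0';
    /* Strip the trailing pad spaces from the service name. */
    for (int i = SVC_NAME_LEN - 1; i >= 0 && svc[i] == ' '; i--)
        svc[i] = '\0';

    *payload = raw + SVC_NAME_LEN;
    *payload_len = raw_len - SVC_NAME_LEN;
    return 0;
}
```

For example, the 17-byte message "INQUIRY ACCT=1234" yields the service name "INQUIRY" and the 9-byte payload "ACCT=1234". An outbound routine could apply the reverse transformation to data sent back to the client.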

Up to 8 ports can be specified to support various clients. The SCA module can directly transfer data to the CLH module and simultaneously handle both non-Tmax clients and Tmax clients. SCA provides services using a TCP/IP raw socket or the Tmax client library.

The following figure illustrates how to call a service using CA (Client Agent), a type of SCA.

3.12. Various Communication Methods

The client requests communication with the server using one of the following four methods:

-

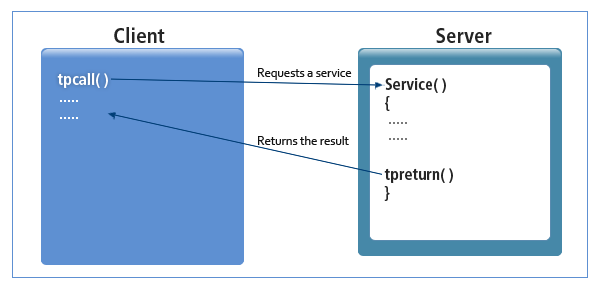

Synchronous Communication

The client sends a request to the server and is blocked until it receives the reply.

Synchronous Communication


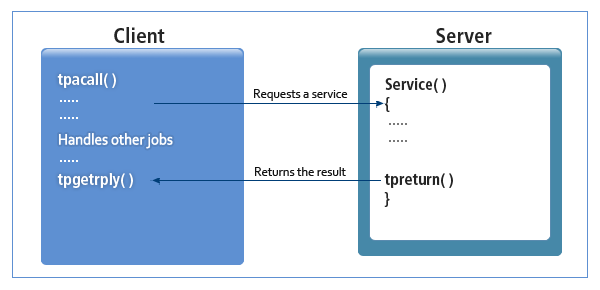

Asynchronous Communication

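After sending a request to the server, the client can perform other tasks while waiting for the reply, and then retrieves the reply by calling a reply-receiving function (in the XATMI API, tpgetrply() collects the reply to a request sent with tpacall()).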

Asynchronous Communication

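In the XATMI model, an asynchronous request returns a call descriptor that is later used to collect the matching reply; a synchronous call behaves like an asynchronous send immediately followed by a wait for its reply. The following self-contained C simulation illustrates only this descriptor bookkeeping. sim_acall, sim_getrply, and the doubling "service" are hypothetical stand-ins, not the Tmax or XATMI API, and the reply is computed immediately instead of by a remote server.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_PENDING 8

/* Simulated table of outstanding requests, indexed by call descriptor. */
typedef struct {
    int in_use;
    int reply;
} pending_slot;

static pending_slot pending[MAX_PENDING];

/* Stand-in service: doubles its input. */
static int double_service(int input) { return input * 2; }

/* Hypothetical async send: returns a call descriptor (>= 0) identifying
 * the outstanding request, or -1 if too many requests are pending. */
int sim_acall(int input)
{
    for (int cd = 0; cd < MAX_PENDING; cd++) {
        if (!pending[cd].in_use) {
            pending[cd].in_use = 1;
            pending[cd].reply = double_service(input);
            return cd;
        }
    }
    return -1;
}

/* Hypothetical reply retrieval: copies the reply for descriptor cd and
 * frees the slot. Returns 0 on success, -1 for an invalid descriptor. */
int sim_getrply(int cd, int *reply)
{
    assert(reply != NULL);
    if (cd < 0 || cd >= MAX_PENDING || !pending[cd].in_use)
        return -1;
    *reply = pending[cd].reply;
    pending[cd].in_use = 0;
    return 0;
}
```

Because each reply is keyed by its descriptor, a client can issue several requests, do other work, and collect the replies in any order; a descriptor cannot be used again after its reply has been collected.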

Conversational Communication

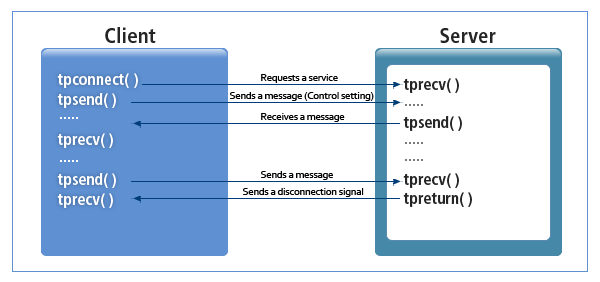

To send a request, the client first establishes a connection to the server and then sends the service request. Messages from the server are also received using conversational communication functions. The client and the server exchange messages by passing control back and forth, and only the side that currently holds control can send a message. When the connection is established, a connection descriptor is returned; this descriptor identifies the conversation in subsequent calls and is used to verify that messages were transferred.

Conversational Communication

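The control rule above can be modeled as a small state machine: a send is rejected unless the sender holds control, and a send can hand control to the other side. In XATMI, this hand-off is requested with flags such as TPRECVONLY on tpsend(); the C sketch below does not use that API. The conversation type and the functions conv_open and conv_send are hypothetical, and message transport is not modeled.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef enum { SIDE_CLIENT, SIDE_SERVER } side_t;

/* Hypothetical model of one conversation. */
typedef struct {
    int cd;          /* connection descriptor identifying the conversation */
    side_t control;  /* side that currently holds control */
    int last_msg;    /* last message sent (stands in for a data buffer) */
} conversation;

/* The client opens the conversation and initially holds control. */
conversation conv_open(int cd)
{
    conversation c = { cd, SIDE_CLIENT, 0 };
    return c;
}

/* Sends msg from 'who'. Returns -1 if 'who' does not hold control.
 * If give_control is true, control passes to the other side. */
int conv_send(conversation *c, side_t who, int msg, bool give_control)
{
    assert(c != NULL);
    if (c->control != who)
        return -1;
    c->last_msg = msg;
    if (give_control)
        c->control = (who == SIDE_CLIENT) ? SIDE_SERVER : SIDE_CLIENT;
    return 0;
}
```

A typical exchange is: the client sends one or more messages, sends a final message with give_control set, and then waits while the server replies and eventually returns control.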

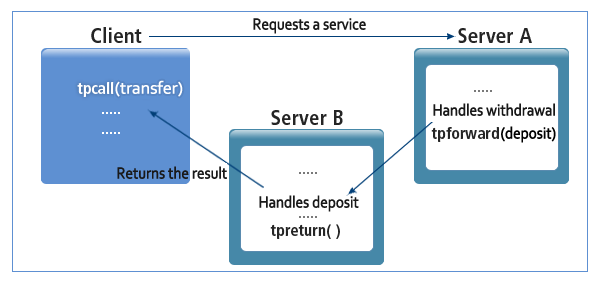

Forwarding Types

-

Type A

Type A improves the efficiency of each module by modularizing business logic and handling services step by step. Problems can be systematically analyzed and corrected. Synchronous and asynchronous communication can be used together.

Forwarding Type - Type A


Type B

Type B is used to integrate existing legacy systems, such as those of external organizations. The server process does not block while waiting for a reply, so it can continuously handle incoming service requests. This type supports services that relay a reply from a legacy system back to the original caller.

Forwarding Type - Type B

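The key point of Type B is that the server does not wait for the legacy system: it records which caller is waiting for each forwarded message, returns to handle the next request, and relays the reply when it arrives. The following C sketch shows that bookkeeping under stated assumptions: messages to the legacy system carry a numeric message ID, callers are identified by an integer, and relay_forward and relay_on_reply are hypothetical names, not Tmax APIs.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_INFLIGHT 16

/* One forwarded request that is still waiting for a legacy reply. */
typedef struct {
    int in_use;
    int msg_id;     /* ID attached to the message sent to the legacy system */
    int caller_id;  /* caller waiting for the reply */
} inflight;

static inflight inflight_table[MAX_INFLIGHT];

/* Records a forwarded request without waiting for the legacy reply.
 * Returns 0 on success, -1 if too many requests are outstanding. */
int relay_forward(int msg_id, int caller_id)
{
    for (size_t i = 0; i < MAX_INFLIGHT; i++) {
        if (!inflight_table[i].in_use) {
            inflight_table[i] = (inflight){1, msg_id, caller_id};
            return 0;
        }
    }
    return -1;
}

/* Called when a reply arrives from the legacy system: returns the caller
 * to relay it to and clears the entry, or -1 if the ID is unknown. */
int relay_on_reply(int msg_id)
{
    for (size_t i = 0; i < MAX_INFLIGHT; i++) {
        if (inflight_table[i].in_use && inflight_table[i].msg_id == msg_id) {
            inflight_table[i].in_use = 0;
            return inflight_table[i].caller_id;
        }
    }
    return -1;
}
```

Replies may arrive in a different order from the requests; matching on the message ID, rather than on arrival order, is what lets the server stay non-blocking.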

-

3.13. Various Development Methods

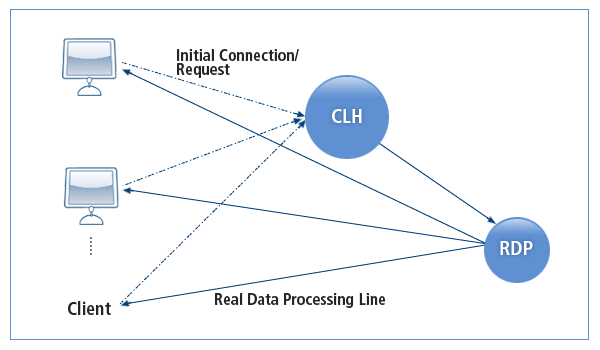

RDP (Real Data Processor)

To rapidly handle data that changes continuously in real time, RDP transfers data directly to the client instead of through CLH (Client Handler). This reduces the load on CLH and improves the performance of the Tmax system. RDP is supported only for UDP and is configured as a UCS-type server process.

Window Control

Two libraries are provided for Windows-based client programs: WinTmax and tmaxmt. Both are thread-based.

-

WinTmax

WinTmax is the Windows-based client library designed to support configuration of multiple windows.

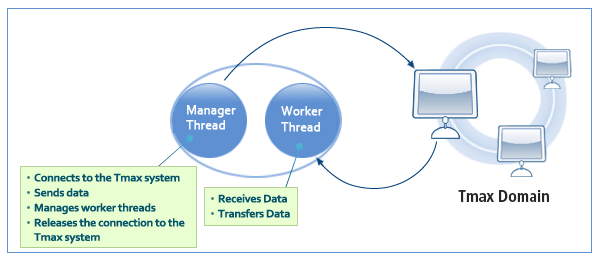

It consists of a manager thread and worker threads. Because up to 256 windows can be configured, it is useful for handling data that arrives simultaneously.

| Classification | Description |
|---|---|
| Management Thread | Connects to the Tmax system, receives data, manages worker threads, and releases the connection to the Tmax system. |
| Worker Thread | Receives data and transfers it to a specific window. |

The following figure shows the structure of the WinTmax library.

Structure and Features of the WinTmax Library


tmaxmt

tmaxmt enables a client program to operate with threads, so the program must be developed using threads. Each thread sends and receives data using dedicated functions such as WinTmaxAcall() and WinTmaxAcall2(). Each of these functions internally creates a thread, calls the service, and delivers the result to a specified window or callback function.

-

WinTmaxAcall sends data to a specified window using SendMessage.

-

WinTmaxAcall2 sends data to a specified callback function.

-

3.14. Reliable Message Transfer

Tmax reliably transfers messages using HMS (Hybrid Messaging System). Tmax HMS has the following characteristics:

-

Hybrid Architecture

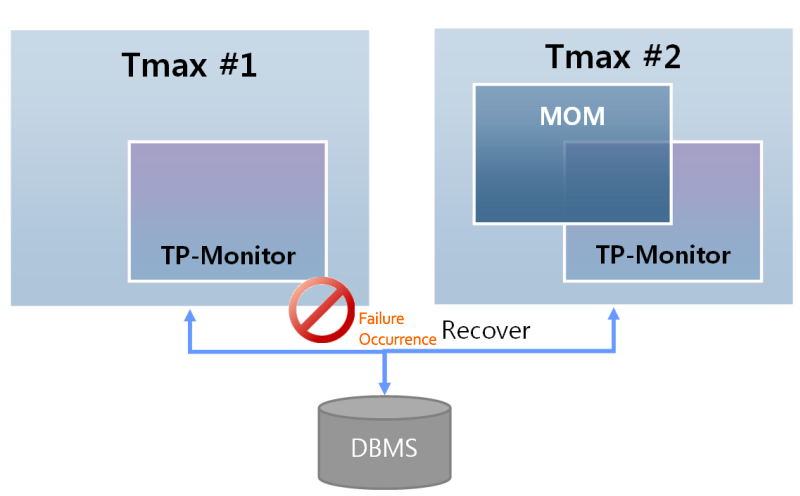

TP-Monitor (Tmax) and MOM (Message-Oriented Middleware) coexist, and 2PC (two-phase commit) is supported between MOM and the RM. To provide MOM features, Tmax HMS allows its modules to be flexibly integrated with one another, in a structure where TMS, the TP-Monitor (the basic feature of Tmax), and MOM coexist.

-

Reliable Failover

Uses a DBMS to store persistent messages.

-

High availability

-

Responds to a failure by designating a backup node. Reliable failover and message transfer are guaranteed through storage: persistent messages are saved in a DBMS and recovered from it when a failure occurs.

-

Responds to a system failure through an Active-Standby HA (High Availability) configuration.

High Availability of HMS


-

-

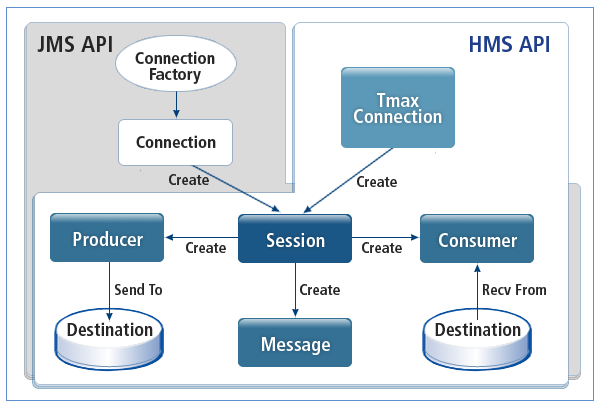

JMS Messaging Model Adoption

Adopts the JMS messaging model to support P2P and Publish/Subscribe, and provides C language APIs similar to the JMS specification for convenience.

API Compatibility of JMS


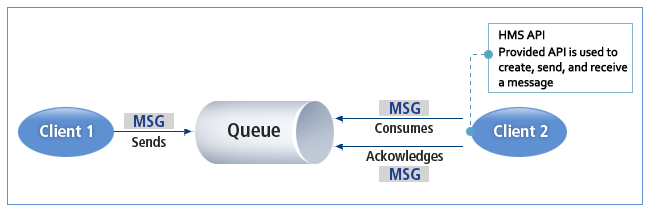

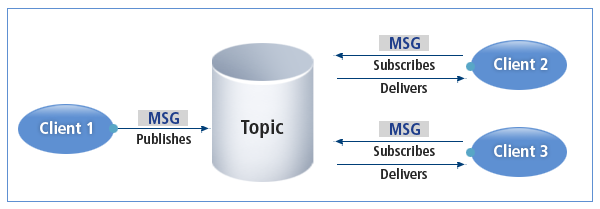

HMS, a Tmax feature, is the communication medium between loosely coupled senders and receivers. It supports the Queue and Topic methods.

-

Queue Method (Point-to-Point)

Each message is delivered to exactly one consumer. There is no timing dependency between sending and receiving: the receiver does not need to be running when the message is sent.

HMS-Queue Method


Topic Method (Publish/Subscribe)

Each message is delivered to multiple consumers (every subscriber to the topic). Reliable delivery is guaranteed by sending the message through a durable subscription.

HMS-Topic Method

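The difference between the two methods lies in how many consumers receive each message. The C sketch below counts deliveries per consumer: queue_send gives each message to exactly one consumer (round-robin is a hypothetical policy chosen for illustration), while topic_publish gives it to every subscriber. The destination type and both functions are hypothetical and are not the HMS API; persistence, durable subscriptions, and timing are not modeled.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_CONSUMERS 4

/* Per-consumer delivery counters for one queue or topic. */
typedef struct {
    int received[MAX_CONSUMERS];
    size_t n_consumers;
    size_t next;  /* round-robin cursor used by queue delivery */
} destination;

/* Queue (point-to-point): each message goes to exactly one consumer. */
void queue_send(destination *d)
{
    assert(d->n_consumers > 0 && d->n_consumers <= MAX_CONSUMERS);
    d->received[d->next]++;
    d->next = (d->next + 1) % d->n_consumers;
}

/* Topic (publish/subscribe): each message goes to every subscriber. */
void topic_publish(destination *d)
{
    assert(d->n_consumers <= MAX_CONSUMERS);
    for (size_t i = 0; i < d->n_consumers; i++)
        d->received[i]++;
}
```

Sending three messages to a queue with three consumers delivers one message to each consumer, whereas publishing three messages to a topic with three subscribers delivers all three messages to each subscriber.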

For more information about HMS, refer to the Tmax HMS User Guide.

4. Characteristics of Tmax

Tmax adopts a peer-to-peer structure instead of the conventional master/slave method, and an RACD (Remote Access Control Daemon) runs on each node. Tmax prevents Queue Full conditions by using the stream pipe communication method. Because heavy transaction loads are blocked at the network processes, stream pipe communication provides more reliable inter-process communication than the message queue method. With these characteristics, Tmax minimizes wasted memory resources, supports rapid failover, and enables an administrator to respond actively to failures.
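A stream pipe is a bidirectional byte-stream channel between processes. On POSIX systems, one common way to create one is socketpair() with AF_UNIX and SOCK_STREAM. The sketch below writes a message into one end and reads it back from the other; it illustrates the IPC mechanism only and makes no claim about how Tmax implements its stream pipes. The function stream_pipe_roundtrip is hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Creates a UNIX-domain stream pipe, writes msg into one end, and reads
 * from the other end into out. Returns the number of bytes read, or -1. */
ssize_t stream_pipe_roundtrip(const char *msg, char *out, size_t out_len)
{
    int fds[2];
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, fds) == -1)
        return -1;

    size_t len = strlen(msg);
    ssize_t n = -1;
    /* Both ends are in one process here; normally each end would be
     * held by a different process. */
    if (write(fds[0], msg, len) == (ssize_t)len)
        n = read(fds[1], out, out_len);

    close(fds[0]);
    close(fds[1]);
    return n;
}
```

In a real deployment, the two descriptors would be held by different processes (for example, after fork()), and the reading side would loop until it has a complete message, because a stream carries bytes without message boundaries.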

The following are the characteristics of Tmax.

-

Complies 100% with various DTP international standards, such as X/Open and OSI-TP

-

Complies with the X/Open DTP (Distributed Transaction Processing) model, the international standard for processing distributed transactions, and specifies a compatible API and system structure based on the application program (AP), transaction manager (TM), resource manager (RM), communication resource manager (CRM), etc.

-

Specifies the framework for the following, as defined by OSI (Open Systems Interconnection group), the international standards organization: the features of a DTP service, the API for those features, the feature for supporting distributed transactions, and the feature for coordinating multiple transactions in a distributed open system.

-

Supports transparent business handling between heterogeneous systems in a distributed environment and OLTP (On-Line Transaction Processing).

-

-

Enhances the Efficiency of Protection and Multiplexing

Enhances the efficiency of protection and multiplexing by implementing IPC (Inter Process Communication) of the stream pipe method.

-

Provides various message types and communication types

-

Supports various message types such as integer, long, and character.

-

Supports various communication types such as synchronous communication, asynchronous communication, conversational communication, and forwarding type communication.

-

Supports FDL (Field Definition Language) and structure arrays.

-

-

Fault tolerance and failover

-

TP-Monitor with a peer-to-peer structure

-

Handles H/W and S/W failures.

-

Supports various features for preventing failure.

-

-

Scalability

-

Ensures stable system performance even if the number of clients increases.

-

Efficiently changes from a 2-tier model to a 3-tier model using CA (Client Agent).

-

Provides various protocols for legacy systems.

-

Provides services in the web environment using WebT.

-

Provides various protocols for legacy systems such as TCP/IP, SNA, and X.25.

-

Supports various process control methods.

-

-

Flexibility

-

Supports various process control methods.

-

Supports customized features if necessary.

-

-

High Performance

-

Efficiently utilizes system resources.

-

Provides a simple, clear API that enhances development productivity.

-

Provides a convenient, easy-to-use system monitoring feature.

-

-

Various H/W Platforms

-

Supports most UNIX systems, Linux systems, Windows NT, etc.

-

-

Supports almost all 4GLs, such as PowerBuilder, Delphi, Visual C/C++, Visual Basic, and .NET (C#, VB).

5. Considerations for Implementing Tmax

5.1. System Requirements

The following describes the system environment for implementing Tmax.

-

System Requirements

| Classification | Description |
|---|---|
| Protocol | Application API: XATMI, TX; Integrating API: XA; Network: TCP/IP, X.25, and SNA (LU 0/6.2) |
| OS | Server: UNIX and Linux; Client: UNIX, Linux, Windows, etc. |
| Platform | All H/W that supports UNIX, Linux, and Windows NT |
| Server Development Language | C, C++, and COBOL |
| Client Development Language | Interfaces for C, C++, Java, and various 4GLs (PowerBuilder, Delphi, Visual C/C++, Visual Basic, .NET (C#, VB), etc.) |
| DBMS | Oracle, Tibero, DB2, Informix, Sybase, and MQ |

-

Server Requirements

| Classification | Description |
|---|---|
| H/W | Memory: 512 MB or more; Disk: 1000 MB or more |
| S/W | UNIX, Linux; C, C++, or COBOL compiler |
| Network Protocol | TCP/IP |

-

Client Requirements

| Classification | Description |
|---|---|
| H/W | Memory: 512 MB or more; Disk: 100 MB |
| S/W | Linux, NT, Windows (XP, 7), and UNIX; PowerBuilder, Delphi, Visual Basic, Visual C++, C, and .NET (C#, VB) |
| Network Protocol | TCP/IP |

5.2. Considerations

When implementing Tmax, several considerations should be taken into account in terms of functionality, performance, and reliability.

-

Functional Considerations

| Item | Consideration |
|---|---|
| Basic Functions of TP-Monitor | Process management; distributed transaction support; load balancing; various communication methods between clients and servers; failure handling (handles and prevents all server failures); support for heterogeneous DBMSs |
| Additional Functionality | Security features; system management; naming service; BP client multiplexing; system-specific security features; structure array communication support; capability to integrate with host systems |

-

-

Other Considerations

| Item | Consideration |
|---|---|
| Performance | Average processing time or maximum transactions per hour; resource utilization |
| Reliability (Failure Handling) | Customer system failure frequency; failure response capability and time |
| Training and Technical Support | Technical expertise of engineers; training and consulting support (system design consulting, specialized training for application development (OS, network, TP-Monitor)) |
| Risk Management | Minimization of trial and error in implementing a new system; system implementation using new technologies; understanding of the tasks to be implemented |
| Ease of Development and Operation | Support for client/server development tools; provision of development drivers; system statistics monitoring; dynamic changes of the TP-Monitor environment; ease of system operation; reporting features |
| User Satisfaction | Satisfaction with features; satisfaction with technical support and education; satisfaction with product flexibility |
| Compatibility | Compliance with international standards (X/Open DTP, OSI-TP); independence from specific hardware and DBMS |
| Company Size | Capitalization; number of employees; revenue; future growth potential |
| Others | Technology transfer; version upgrade plan |